The marriage of empiricism and rationality

Mainstream epistemology—the theory of how we can know things—has two principles: empiricism and rationality. “Empiricism” means that knowledge is based on perception; “rationality” means that knowledge is based on sound reasoning.

Not so long ago, there were believed to be other bases for reliable knowledge: tradition, scripture, and intuition. All five of these competed with each of the others. So rationalists and empiricists were enemies, and it seemed both could not be right. However, in the late 1800s, rationalism and empiricism allied, and killed the other three. The two joined in the holy matrimony of the Scientific Worldview.

This marriage seems happy enough. Indeed, it’s obvious to any working scientist that both are indispensable. It is little-noticed that no contract was drawn up, and the terms of the union are undefined. Perhaps this is a motivated ignorance; why risk upset?

Dropping the flowery metaphors: we have no coherent explanation for how rationality and empiricism relate to each other—even though philosophers have worked hard to find one for several centuries. I find this mysterious and exciting, because I suspect that neither rationality nor empiricism is a good account of knowledge, separately or combined. That opens the possibility for alternative, more accurate explanations.

That is a big topic. This page concentrates on one small aspect: the relationships among mathematical logic, probability theory, and rationality in general. (A terminological point: confusingly, reasoning and empiricism are now often referred to together as “rationality.”) Mathematical logic is the modern, formal version of rationality in the narrow sense, and probability theory is the modern, formal version of empiricism.

It is sometimes said that probability theory extends mathematical logic from dealing with just “true” and “false” to a continuous scale of uncertainty. Some have said that this is proven by Cox’s Theorem. These are both misunderstandings, as I’ll explain below. In short: logic is capable of expressing complex relationships among different objects, and probability theory is not.

A more serious misunderstanding, which follows from these, is that probability theory is a complete theory of formal rationality, or even of rationality in general, or even of epistemology.

In fact, logic can do things probability theory can’t. However, despite much hard work, no known formalism completely unifies the two! Even at the mathematical level, the marriage of rationality and empiricism has never been fully consummated.

Furthermore, probability theory plus logic cannot exhaust rationality—much less add up to a complete epistemology. I’ll end with a very handwavey sketch of how we might make progress toward one.

Plan

I hope to dispel misunderstandings by comparing the expressive power of three formal systems, from most to least expressive (the reverse of the order in which this page takes them up):

- Predicate calculus—the usual meaning of “logic”—can describe relationships among multiple objects.

- Aristotelian logic can describe only the properties of a single object.

- Propositional calculus cannot talk about objects at all.

Probability theory can be viewed as an extension of propositional calculus. Propositional calculus is described as “a logic,” for historical reasons, but it is not what is usually meant by “logic.”

Cox’s Theorem concerns only propositional calculus. Further, it was well-known long before Cox that probability theory does extend propositional calculus.

Informally, probability theory can extend Aristotelian logic as well. This is usually unproblematic in practice, although it squicks logicians a bit.

Probability theory by itself cannot express relationships among multiple objects, as predicate calculus (i.e. “logic”) can. The two systems are typically combined in scientific practice. In specific cases, this is intuitive and unproblematic. In general, it is difficult and an open research area.

These misunderstandings probably originate with E. T. Jaynes. More about that toward the end of this page.

“Expressive power” is about what a system allows you to say. A possible objection to probability theory as an account of rationality is that it is too expensive to compute with. This essay is not about that problem. Even if all computation were free, probability theory could not reason about relationships, because it can’t even represent them.1

Propositional calculus

Propositional calculus is the mathematics of “and,” “or,” and “not.” (“Calculus” here has nothing to do with the common meaning of “calculus” as the mathematics of continuous change: derivatives and integrals.) There is not much to say about “and,” “or,” and “not,” and not much you can do with them. (You can skip to the next section if you know this stuff.)

A “proposition,” for propositional calculus, is something that is either true or false. As far as this system is concerned, there is nothing more to a proposition than that. Particularly, propositional calculus is never concerned with what a proposition is about. It is only concerned with what happens when you combine propositions with “and,” “or,” and “not.” It symbolizes these three with ∧, ∨, and ¬, respectively.

So let’s consider some particular proposition, which we’ll call p. All we can say about it is that it is either true or false. Regarding ¬, we can say that if p is true, then ¬p is false. Also, if p is false, then ¬p is true. Thus endeth the disquisition upon negation. (Perhaps you are not enthralled so far.)

Consider two propositions, p and q. If both p and q are true, then p∧q is true. If either of them is false, then p∧q is false. (Surprised?) That’s all that can be said about ∧.

You’ll be shocked to learn that if either p or q is true, then p∨q is true; but if both of them are false, then p∨q is false.

From these profound insights, we can prove some important theorems. For example, it can be shown that p∧¬p is false, regardless of whether p is true or false. (You may wish to check this carefully.)

Likewise, it can be shown that p∨¬p is true, regardless of whether p is true or false. In other exciting news, it turns out that p∧p is true if p is true, and false if not. Moreover, p∧q is true if, and only if, q∧p is true!

There are a half dozen banalities of this sort in total; and they exhaust the expressive power of propositional calculus.
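Because propositional calculus has only two truth values, every claim above can be checked mechanically by trying all the cases. Here is a minimal sketch in Python. The connectives are written as ordinary functions, and the names `NOT`, `AND`, `OR`, and `is_tautology` are illustrative choices, not standard notation.

```python
from itertools import product


def NOT(p):
    return not p


def AND(p, q):
    return p and q


def OR(p, q):
    return p or q


def is_tautology(formula, n_vars):
    """True if `formula` is True under every assignment of n_vars truth values."""
    return all(formula(*values) for values in product([True, False], repeat=n_vars))


# p ∧ ¬p is false whatever p is, so its negation is always true.
contradiction_is_always_false = is_tautology(lambda p: NOT(AND(p, NOT(p))), 1)
# p ∨ ¬p is true whatever p is.
excluded_middle = is_tautology(lambda p: OR(p, NOT(p)), 1)
# p ∧ p has the same truth value as p.
idempotence = is_tautology(lambda p: AND(p, p) == p, 1)
# p ∧ q has the same truth value as q ∧ p.
commutativity = is_tautology(lambda p, q: AND(p, q) == AND(q, p), 2)

print(contradiction_is_always_false, excluded_middle, idempotence, commutativity)
# → True True True True
```

Nothing in this code ever asks what `p` or `q` is about; it only tries True and False.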

Propositional calculus and “logic”

Propositional calculus is extremely important; it’s the rock bottom foundation for all of mathematics. But by itself, it’s also extremely weak. It’s useful to understand only for its role in more powerful systems.

Propositional calculus can’t talk about anything. As far as it knows, propositions are just true or false; they have no meaning beyond that.

The grade school example of logic is this syllogism:

(a) All men are mortal.

(b) Socrates is a man.

Therefore:

(c) Socrates is mortal.

We can capture the “therefore” in propositional calculus.2 Its symbol is →. It turns out that p→q is equivalent to (¬p)∨q. (You may need to think that through if you aren’t familiar with it. Consider each of the cases of p and q being true and false. If p is true, then ¬p is false, so q has to be true to make (¬p)∨q true. If p isn’t true, then ¬p is true, and then it doesn’t matter whether q is true or false.)
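The case analysis in that parenthesis can be run directly. In this sketch, implication is given by its own truth table (false only when p is true and q is false), and each row is compared with (¬p)∨q. The table name `IMPLIES` is just an illustrative choice.

```python
from itertools import product

# Material implication p → q, written out as its truth table:
# it is false only when p is true and q is false.
IMPLIES = {
    (True, True): True,
    (True, False): False,
    (False, True): True,
    (False, False): True,
}

equivalent = True
for p, q in product([True, False], repeat=2):
    rhs = (not p) or q  # (¬p) ∨ q
    print(f"p={p!s:5} q={q!s:5}  p→q={IMPLIES[(p, q)]!s:5}  ¬p∨q={rhs}")
    equivalent = equivalent and IMPLIES[(p, q)] == rhs

print("equivalent:", equivalent)  # → equivalent: True
```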

So we could try to write the syllogism, in propositional calculus, as (a∧b)→c. But this is not a valid deduction in propositional calculus. As far as it knows, a, b, and c are all independent, because it has no idea what any of them mean. The fact that a and b are both about men, and b and c are both about Socrates, is beyond the system’s ken.
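The failure shows up under brute force: search all eight truth assignments for one that makes (a∧b)→c false. A sketch in Python; because the system cannot look inside a, b, and c, nothing rules out the assignment in which both premises are true and the conclusion is false.

```python
from itertools import product


def implies(p, q):
    # p → q is equivalent to (¬p) ∨ q
    return (not p) or q


# a = "All men are mortal", b = "Socrates is a man", c = "Socrates is mortal".
# To propositional calculus these are three unrelated truth values.
counterexamples = [
    (a, b, c)
    for a, b, c in product([True, False], repeat=3)
    if not implies(a and b, c)
]
print(counterexamples)  # → [(True, True, False)]
```

A valid deduction would have produced an empty list.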

For this calculus, propositions are “atomic” or “opaque.” You aren’t allowed to look at their internal structure. Propositional calculus has no numbers; no individuals, no properties, no relationships; nothing except true, false, and, or, and not. Most important, it has no way of reasoning from generalizations (“all men are mortal”) to specifics (“Socrates is mortal”).3

For historical reasons, propositional calculus is described as “a logic,” and is sometimes called “propositional logic.” But it is not what mathematicians or philosophers mean when they talk about “logic.” They mean predicate calculus, a different and immensely more powerful system.

Probability theory extends propositional calculus

Probability theory can easily be seen as an extension of propositional calculus to deal with uncertainty. In fact, the axiomatic foundations of the two were developed in concert, in the mid-1800s, by Boole and Venn among others. It was obvious then that the two are closely linked.

This section sketches the way probability theory extends propositional calculus, in case you are unfamiliar with the point. You can skip ahead if you already know this.

Probabilities are numbers from 0 to 1, where 1 means “certainly yes,” 0 means “certainly no,” and numbers in between represent degrees of uncertainty. When probability theory is applied in the real world, probabilities are assigned to various sorts of things, like hypotheses and events; but the math doesn’t specify that. As far as the math is concerned, there are just various thingies that have probabilities, and it has nothing to say about the thingies themselves. Just as in propositional calculus, probability theory doesn’t let you “look inside” them. In fact, one common way of applying probability theory is to say that the thingies are, indeed, propositions.

An event is something that either happens, or doesn’t. If e is an event, we symbolize its probability as P(e). We can symbolize the other possibility—that e doesn’t happen—as ¬e. It is certain that either e or ¬e will happen, so P(e) + P(¬e) = 1. Rearranging, P(¬e) = 1 – P(e). If e is certain, then P(e) = 1, so P(¬e) = 0, i.e. certainly false.

Suppose f is another event, which can happen only if e doesn’t happen. For example, if e is a die coming up 3, and f is the die coming up 4, then they are mutually exclusive. In that case, P(e∨f), the probability that the die comes up either 3 or 4, is P(e)+P(f). (For a six-sided die, that is 1/6+1/6=1/3.)
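These two rules can be checked by representing each event as the set of die faces on which it happens, so that ¬ and ∨ become set complement and set union. A minimal sketch in Python, assuming a fair die; the function `P` and the set encoding are illustrative choices, not something the math prescribes.

```python
from fractions import Fraction

# A fair six-sided die: each face has probability 1/6 (fairness is assumed).
faces = range(1, 7)
P_face = {face: Fraction(1, 6) for face in faces}


def P(event):
    """Probability of an event, represented as the set of faces on which it happens."""
    return sum((P_face[f] for f in event), Fraction(0))


e = {3}                   # the die comes up 3
not_e = set(faces) - e    # ¬e: it comes up anything else
f = {4}                   # the die comes up 4; e and f are mutually exclusive

print(P(e), P(not_e), 1 - P(e))  # → 1/6 5/6 5/6
print(P(e | f), P(e) + P(f))     # → 1/3 1/3
```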

Suppose two events are “independent”: approximately, there is no causal connection between them. For example, two dice rolled together should not affect each other. Let’s say e is the first die coming up 3, and g is the second one coming up 3. In that case, the probability that they will both come up 3, P(e∧g) = P(e) × P(g), which is 1/6 × 1/6 = 1/36.4

What is the probability that at least one die comes up 3? These are not mutually exclusive, so it is not simply the sum. It is P(e∨g) = P(e) + P(g) – P(e∧g), or 1/3 – 1/36 = 11/36. “At least one” includes “both,” and the plain sum counts that case twice, so we have to subtract it out once.
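Both results can be confirmed by listing all 36 equally likely outcomes of two fair dice and counting. A sketch in Python, assuming fair and independent dice; events are written as predicates on an outcome pair, which is one convenient encoding among several.

```python
from fractions import Fraction
from itertools import product

# Outcomes of two fair dice: all 36 ordered pairs are equally likely.
outcomes = list(product(range(1, 7), repeat=2))
weight = Fraction(1, len(outcomes))


def P(event):
    """Probability that `event` (a predicate on an outcome pair) holds."""
    return sum((weight for o in outcomes if event(o)), Fraction(0))


def e(o):
    return o[0] == 3  # first die comes up 3


def g(o):
    return o[1] == 3  # second die comes up 3


p_both = P(lambda o: e(o) and g(o))
p_either = P(lambda o: e(o) or g(o))

print(p_both, P(e) * P(g))             # → 1/36 1/36
print(p_either, P(e) + P(g) - p_both)  # → 11/36 11/36
```

Counting directly gives 11 outcomes with at least one 3, which matches the inclusion–exclusion formula.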

So, taken together, we see a simple and intuitive connection between probability and the operations of propositional calculus.

Cox’s Theorem

Cox’s Theorem concerns this relationship between propositional calculus and probability theory. It is irrelevant to the question “does probability theory extend logic” because:

- Propositional calculus is not “logic” as that is usually understood.

- It was well-known for decades before Cox that probability theory does extend propositional calculus.5

So you can probably just skip the rest of this section. However, since some people have misunderstood Cox’s Theorem as proving that probability theory includes all of logic, and is therefore a complete theory of rationality, I’ll say a little more about it.

Cox’s Theorem was an attempt to answer the informal question: Is there something like probability theory, but not quite the same?

This sort of question is often paradigm-breaking in mathematics. Is there something like Euclid’s geometry, but not quite the same? Yes, there are non-Euclidean geometries; and they turn out to be the mathematical key to Einsteinian relativity. Are there things that are like real numbers but not quite the same? Yes; complex numbers, for example, which have endless applications in pure mathematics, physics, and engineering.

So if there were something like probability theory, but not the same, we’d want to know about it. It might be extremely useful, in unexpected ways. Or, it might just be a mathematical curiosity.

Alternatively, if we knew that there is nothing similar to probability theory, then we’d have more confidence that using it is justified. We know probability theory often gives good results; if there’s nothing else like it, then we don’t have to worry that some other method would give better ones.

To answer the question, we need to say precisely what “like” would mean. (Probate law is “like” probability theory in some ways, but not ones we care about.) One approach is to define “what probability theory is like”; and then we can ask “are there other things that are like that?” So, what properties of probability theory are important enough that anything “like” it ought to have them?

This is not a mathematical question; it’s just a matter of opinion. Different people have different, reasonable opinions about what’s important about probability theory. The answer to “is there anything else like probability theory?” comes out differently depending on what properties you think something else would have to have to count as “like.”

When Cox was writing, in the 1940s, definitely nothing “like” probability theory was known. So he wanted to prove that indeed this would remain true.

Probably everyone would agree that anything “like” probability theory has to be a method for reasoning about uncertainty. So Cox started by asking: what would everyone intuitively agree has to be true about any sane method? For one thing, everyone would agree that in cases of certainty, any method ought to accord with propositional calculus; and that in cases of near-certainty, it ought to nearly accord. So, for instance, if q is nearly certain, then q∨p should also be nearly certain.
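Ordinary probability theory does satisfy this particular requirement. A short check, using the same addition rule as in the dice example, with ε standing for some small amount of doubt:

```latex
\begin{align*}
P(q \lor p) &= P(q) + P(p) - P(q \land p) \\
            &\ge P(q) && \text{since } P(q \land p) \le P(p), \\
\text{so if } P(q) \ge 1 - \varepsilon: \quad
1 - \varepsilon &\le P(q) \le P(q \lor p) \le 1 .
\end{align*}
```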

So Cox’s plan was to start with a handful of such intuitions, and show that probability theory is the only system that satisfies them. And, he thought he had done that.

Unfortunately, there are technical, philosophical, and practical problems with his result. I will mention some of these, but only briefly—because the whole topic is irrelevant to my point.

Technically, Cox’s proof was simply wrong, and the “Theorem” as stated is not true. Various technical fixes have been proposed, yielding revised, accurate theorems with similar content.

Philosophically, it is unclear that all his requirements were intuitive. For example, the proof requires negation to be a twice-differentiable function. Some authors do not consider twice-differentiability an “intuitive” property of negation; others do.

It is also controversial what the (fixed-up) mathematical result means philosophically. Whereas in 1946, when Cox published his Theorem, there clearly was nothing else like probability theory, there are now a variety of related mathematical systems for reasoning about uncertainty.

These share a common motivation. Probability theory doesn’t work when you have inadequate information. Implicitly, it demands that you always have complete confidence in your probability estimate,6 like maybe 0.5279371, whereas in fact often you just have no clue. Or you might say “well, it seems the probability is at least 0.3, and not more than 0.8, but any guess more definite than that would be meaningless.”

So various systems try to capture this intuition: sometimes a specific numerical probability is unavailable, but you can still do some reasoning anyway. These systems coincide with probability theory in cases where you are confident of your probability estimate, and extend it to handle cases where you aren’t.
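A very simple version of that intuition replaces a single probability with an interval of bounds. The Python sketch below is only an illustration of the general idea, not a faithful rendering of any specific published system; the class `Interval` and the functions `negate` and `or_disjoint` are hypothetical names. It shows the property claimed above: when the interval shrinks to a single point, the results coincide with ordinary probability theory.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    """A probability known only to lie somewhere in [lo, hi]."""
    lo: Fraction
    hi: Fraction

    def __post_init__(self):
        if not 0 <= self.lo <= self.hi <= 1:
            raise ValueError(f"not a probability interval: [{self.lo}, {self.hi}]")


def negate(a: Interval) -> Interval:
    """Bounds on P(¬e) from bounds on P(e), using P(¬e) = 1 − P(e)."""
    return Interval(1 - a.hi, 1 - a.lo)


def or_disjoint(a: Interval, b: Interval) -> Interval:
    """Bounds on P(e ∨ f) for mutually exclusive e and f, using P(e ∨ f) = P(e) + P(f)."""
    return Interval(a.lo + b.lo, min(Fraction(1), a.hi + b.hi))


# "At least 0.3, and not more than 0.8":
vague = Interval(Fraction(3, 10), Fraction(4, 5))
print(negate(vague).lo, negate(vague).hi)  # → 1/5 7/10

# With a precise estimate the interval is a single point, and the result
# coincides with ordinary probability theory.
sharp = Interval(Fraction(1, 6), Fraction(1, 6))
print(negate(sharp).lo, negate(sharp).hi)  # → 5/6 5/6
print(or_disjoint(sharp, sharp).lo)        # → 1/3
```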

Some advocates of probability theory point to Cox’s Theorem as reason to dismiss alternatives. Is that justified? It is not a mathematical reason; the alternatives are valid as mathematical systems. It’s a philosophical claim that depends on intuitions that reasonable people disagree about.

Practically, what we want to know is: are there times when we should use one of the alternatives, instead of probability theory?

This is an empirical, engineering question, not a mathematical one. I have no expertise in this area, but from casual reading, the answer seems to be “no.” Successful applications seem to be rare or non-existent. When probability theory doesn’t work, the other leading brands don’t work either. They just add complexity.

So in a practical sense, I think Cox was probably right. As far as we know, there’s nothing similar to probability theory that’s also useful in practice.7

Using probability in the real world

Probability theory is just math; but we care about it because it’s useful when applied to real-world problems. Originally, for example, it was developed to analyze gambling games.

Suppose you roll a die, and you believe it is fair. Then you believe that the probability it will come up as a three is 1/6. You could write this as P(3) = 1/6.

People write things like that all the time, and it is totally legitimate. It might make you slightly uneasy, however. 3 is a number. It’s abstract. Do numbers have probabilities? Not as such. You assign 3 a probability, in this particular context. In a different context—for example, rolling an icosahedral die—it would have a different probability.
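A program cannot rely on context the way a reader does, so the context that “P(3)” leaves implicit has to become an explicit argument. A toy sketch in Python, assuming fair dice with faces numbered 1 to n; the function `p_face` is hypothetical.

```python
from fractions import Fraction


def p_face(face: int, sides: int) -> Fraction:
    """Probability that a fair die with faces 1..sides shows `face`."""
    if not 1 <= face <= sides:
        return Fraction(0)
    return Fraction(1, sides)


print(p_face(3, 6))   # → 1/6   (ordinary six-sided die)
print(p_face(3, 20))  # → 1/20  (icosahedral die)
```

The same “3” gets a different probability once the die is named.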

There is always a process of intelligent interpretation between a mathematical statement and the real world. This interpretation gives mathematics “aboutness.” What, in the real world, do the mathematical entities refer to? Here, you understand that “3” corresponds to whether a die has come up three or not.

This interpretation is not merely mental; it is a bodily process of action and perception. You have learned to roll a die in a way that makes its outcome sufficiently random8; and you can count the number of pips on a die face.9 The usefulness of any mathematics depends on such interpretive processes working reliably.

“3” is ambiguous, as we saw. In this simple case, you know what it refers to, and won’t get confused. But someone else might read your observation that “P(3) = 1/6” and try to apply it to an icosahedral die; in which case they might make bad bets. So when communicating with others, or in more complicated cases where you might lose track yourself, you would want to be more explicit.

What exactly did “P(3) = 1/6” mean? Maybe it’s “this particular die, now rolling, has probability 1/6 of coming up with a three.” Or maybe you have a more general belief: “any fair six-sided die has probability 1/6 of coming up three any time it is rolled.” So then, to avoid ambiguity, you could write:

P(any fair six-sided die coming up three any time it is rolled) = 1/6

This has a high ratio of English to math, however. English is notoriously ambiguous. Quite possibly there’s still room for misinterpretation. It might be better to write this in a way that is purely mathematical, so there’s no ambiguity left.

Modern mathematical logic was developed as a way to do exactly that. Logicians wanted a systematic way of turning English statements into unambiguous mathematical ones. The system they invented is called predicate calculus.

It is now the meta-language of mathematics. All math can (in principle) be expressed in predicate calculus. It is immensely more powerful than propositional calculus. Its key trick—which is necessary to express generalizations like “any six-sided die”—is called logical quantification.

But before we get to that, let’s look at a simpler system, Aristotelian logic; and look at how probability theory can more-or-less handle Aristotelian generalization.

Implicit generalizations

Aristotelian logic allows us to make general statements about the properties of particular, single objects. The standard example is “all men are mortal.” The Aristotelian syllogism allows us to reason from general to specific statements. For example, if we know that Socrates is a specific man, then we can conclude that Socrates is mortal.

How does this relate to probability theory? “All men are mortal” is usually considered certain, so it’s not a good example for answering that.

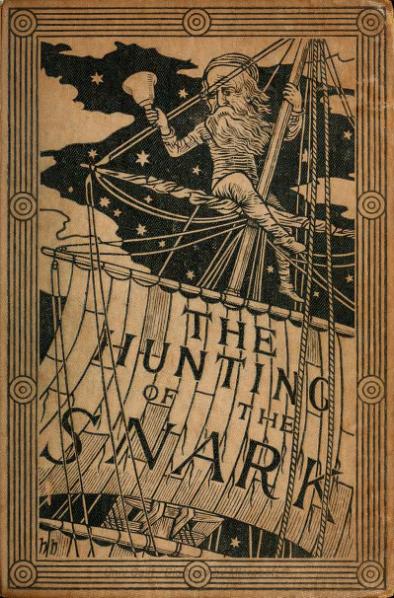

Instead: the logician C. L. Dodgson demonstrated that some snarks—not all—are boojums. A probabilist may write this generalization as a conditional probability:

P(boojum|snark) = 0.4

The vertical bar | is read “given”. The statement is understood as something like “if you see a snark, the probability that it is a boojum is 0.4.” Or, “the probability of boojumness given snarkness is 0.4.”
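One common way to read such a statement is as a proportion: among snarks, the fraction that are boojums. The sketch below uses that frequency reading with an invented census; every creature name and property in it is made up for illustration. Each property test is applied to the same creature, which is exactly the “same thing” reading that the notation leaves implicit.

```python
from fractions import Fraction

# A made-up census: each creature maps to the set of properties it has.
creatures = {
    "Edward": {"snark", "boojum"},
    "Carlotta": {"snark"},
    "Ignatius": {"snark", "boojum"},
    "Mabel": {"snark"},
    "Otto": {"snark"},
}


def conditional(target: str, given: str) -> Fraction:
    """Fraction of creatures having property `given` that also have `target`."""
    having_given = [props for props in creatures.values() if given in props]
    return Fraction(sum(target in props for props in having_given), len(having_given))


print(conditional("boojum", "snark"))  # → 2/5
```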

Mathematicians would call this “an abuse of notation”; but if it is interpreted intelligently in context, it’s unproblematic. Still, it’s rather queer. What exactly are “snark” and “boojum” supposed to mean here?

A probability textbook will tell you that the things that get probabilities are events or hypotheses or outcomes or propositions. (Different authors disagree.) We could legitimately say

P(Edward is a boojum|Edward is a snark) = 0.4

because “Edward is a snark” is a proposition. But this is a specific fact, and we want to express a generalization about snarks broadly.

“Snark” and “boojum” refer to categories, or properties; and those don’t get probabilities. In this context, they are meant to be read as something like “this thing is a snark” (or boojum). A more pedantically correct statement would be:

P(it is a boojum|something is a snark) = 0.4

But again this doesn’t look like math; and what does “it” refer to?10 How are we sure that the “something” that is a snark refers to the same thing as the “it” that might be a boojum? Someone might read this, observe that Carlotta is a snark, and conclude that there’s a 0.4 probability that Edward is a boojum. That is not a deduction the equation was supposed to allow. We’re depending on intelligent interpretation. We’ll see that this could become arbitrarily difficult, and therefore unreliable, in more complex cases.

Formally, a Bayesian network is a set of specific conditional probabilities. In practice, by abuse of notation and intelligent application, it is used to express a set of generalizations. For example:

P(snark|hairy) = 0.01

P(boojum|snark) = 0.4

P(lethal|boojum) = 0.9

Implicitly, “hairy,” “snark,” “boojum,” and “lethal” are all meant to refer to the same creature—whichever that happens to be in the situation at hand.
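The chain can be used numerically, but only with an extra assumption. The sketch below assumes the chain is Markov: each property depends only on the one before it. Under that assumption, the probability that a hairy creature is a snark, a boojum, and lethal is the product of the three numbers. That product is a lower bound on P(lethal|hairy), since the network as given says nothing about lethal creatures that are not boojums. Note that the code never says which creature it is about; like the notation, it leaves that to the reader.

```python
from fractions import Fraction

# The three conditional probabilities from the text, as exact fractions.
P_snark_given_hairy = Fraction(1, 100)
P_boojum_given_snark = Fraction(2, 5)
P_lethal_given_boojum = Fraction(9, 10)

# ASSUMPTION: hairy → snark → boojum → lethal is a Markov chain, so
# P(snark ∧ boojum ∧ lethal | hairy) is the product of the three links.
p_path = P_snark_given_hairy * P_boojum_given_snark * P_lethal_given_boojum
print(p_path, float(p_path))  # → 9/2500 0.0036
```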

So long as this implicit reference works out, probability theory can in practice capture Aristotelian logic: generalizations about properties of a single object.11 Mathematical formulations of probability theory don’t support that, but there’s usually no practical problem.

However, Aristotelian logic is weak; it doesn’t let you talk about relationships among objects. Let’s shine some light on an example.

There are other scary monsters out there. Grues, for example. If you explore a cave in the dark, you are likely to be eaten by one.

P(grue|cave) = 0.7

P(grue|dark) = 0.8

P(eaten|grue) = 0.9

This example is different. “Cave” does not refer to the same thing as “grue”! Again, with intelligent interpretation, this may not be a problem. The statements were intended to mean something like:

P(there is some grue nearby|I am in cave) = 0.7

P(there is some grue nearby|the cave is dark) = 0.8

P(that nearby grue will eat me|there is a grue nearby) = 0.9

English is carrying too much of the load here, however. It’s someone’s job to keep track of grues and caves and “I”s and make sure they are all in the right relationships. The conditional probability formalism is not able to do that.12

Logical quantification

Predicate calculus has an elegant, general way of talking about relationships: logical quantifiers. They are the solution here.

The simplest use cleans up the vagueness of “P(boojum|snark) = 0.4.” We’re supposed to interpret that as: anything that is a snark has probability 0.4 of being a boojum. In predicate calculus,

∀x: P(boojum(x)|snark(x)) = 0.4

“∀” is read “for all,” and the thing that comes after it is a placeholder variable that could stand for anything. So this means “for anything at all—call it x—the probability that x is a boojum, given that x is a snark, is 0.4.”

So ∀ is doing two pieces of work for us. One is that it lets us make an explicitly general statement. “P(boojum|snark) = 0.4” was implicitly meant to apply to all monsters, but that worked only “by abuse of notation”; you can’t actually do that in formal probability theory.

∀’s second trick is to allow us to reason from generalities to specifics—the Aristotelian syllogism. This is done by “binding” the variable x to a particular value, such as Edward. From

∀x: P(boojum(x)|snark(x)) = 0.4

we are allowed to logically deduce

P(boojum(Edward)|snark(Edward)) = 0.4

(This operation is called “instantiation” of the general statement.)

There are two logical quantifiers, ∀ and ∃. The second one gets read “there exists.” For example,

∃x: father(x, Edward)

“There exists some x such that x is Edward’s father”—or, more naturally, “Something is Edward’s father,” or “Edward has a father.”

The power of predicate calculus comes when we combine two or more quantifiers in a single statement, one nested inside another.13 Because each quantifier has its own variable, we can use them to relate two or more things.

Fatherhood is a relationship. Every vertebrate has a father.14

∀x: vertebrate(x) → (∃y: father(y, x))

From this, if we also know that Edward is a vertebrate, we can deduce that he has a father.15

Probably, every vertebrate has exactly one father:

∀x: vertebrate(x) → (∃y: father(y, x) ∧ (∀z: father(z, x) → z=y))

“If x is a vertebrate, then it has some father (y), and if anything (z) is x’s father, it’s actually just y.”
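Over a finite world, nested quantifiers become nested loops: ∀ becomes `all` and ∃ becomes `any`. The sketch below checks both formulas against a made-up toy world. Only Edward and Carlotta are marked as vertebrates so that the world stays finite, and real domains are rarely finite, so this is checking a toy model rather than proving anything.

```python
# A made-up, finite toy world.
domain = {"Edward", "Carlotta", "Arthur", "Harold"}
vertebrates = {"Edward", "Carlotta"}


def has_a_father(domain, vertebrates, father):
    # ∀x: vertebrate(x) → (∃y: father(y, x))
    return all(
        x not in vertebrates or any((y, x) in father for y in domain)
        for x in domain
    )


def has_exactly_one_father(domain, vertebrates, father):
    # ∀x: vertebrate(x) → (∃y: father(y, x) ∧ (∀z: father(z, x) → z = y))
    return all(
        x not in vertebrates
        or any(
            (y, x) in father and all((z, x) not in father or z == y for z in domain)
            for y in domain
        )
        for x in domain
    )


one_each = {("Arthur", "Edward"), ("Harold", "Carlotta")}
two_for_edward = one_each | {("Harold", "Edward")}

print(has_a_father(domain, vertebrates, one_each),
      has_exactly_one_father(domain, vertebrates, one_each))        # → True True
print(has_a_father(domain, vertebrates, two_for_edward),
      has_exactly_one_father(domain, vertebrates, two_for_edward))  # → True False
```

The second world, in which Edward has two fathers, satisfies the first formula but not the second.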

Do you believe that? I was sure of it until I thought a bit. If two sperm fertilize an egg at almost the same instant, maybe it’s possible (if very unlikely) that during the first mitosis, the excess paternal chromosomes will get randomly dumped, leaving daughter cells with a normal karyotype composed of a mixture of chromosomes from the two fathers. For all I know, this does happen occasionally in fish or something. Or maybe it just can’t happen, because vertebrate eggs have a reliable mechanism to detect a wrong karyotype, and abort.16

We’d like to bring evidence to bear—evidence that (like all real-world evidence) cannot be certain. Suppose we sequence DNA from some monsters and find that it sure looks like Arthur and Harold are both fathers of Edward:

P(father(Arthur, Edward) | experiment) = 0.99

P(father(Harold, Edward) | experiment) = 0.99

P(Arthur = Harold | experiment) = 0.01

This should update our belief that every vertebrate has only one father. How?

Here we would be reasoning from specifics to generalities (whereas the implicit instantiation trick of Bayesian networks allows us to reason from generalities to specifics). This is outside the scope of probability theory.

Statistical inference is based on probability theory, and enables reasoning from specifics to generalities in some cases. It is not just probability theory, though; and it handles only simple, restricted cases; and it doesn’t relate to predicate calculus in any straightforward way.

Back to the cave:

∀x: ∀y: P(∃z: grue(z) ∧ near(z,x) | person(x) ∧ cave(y) ∧ in(x, y)) = 0.7

“If a person (x) is in a cave (y), then the probability that there’s a grue (z) near the person is 0.7.” Most of what is going on in this statement is predicate logic, not probability. Remember that for probability theory, propositions are opaque and atomic. As far as it is concerned, “person(x) ∧ cave(y) ∧ in(x, y)” is just a long name for an event that is either observed or not; and so likewise “∃z: grue(z) ∧ near(z,x).” It can’t “look inside” to see that we’re talking about three different things and their relationships.

In practice, probability theory is often combined with other mathematical methods (such as predicate logic in this case). Probabilists mostly don’t even notice they are doing this. When they use logic, they do so informally and intuitively.

The danger is that they imagine probability theory is doing the work, when in fact something else is doing the heavy lifting. This can lead to logical errors. That is common in scientific practice: the probabilistic part of the reasoning is carried out correctly, but it is “misapplied.” In routine science, “probabilistic reasoning” is usually “we ran this statistics program.” “Misapplied” means that the program was run correctly, but due to a logical error, the results don’t imply what the users thought.

Another danger is sometimes-dramatic over-estimation of what probability theory is capable of. The mistaken idea that “probability theory generalizes logic”17 led to some badly confused work in artificial intelligence, for instance. More importantly, it has also warped some accounts of the philosophy of science.

Probabilistic logic

Probability theory can’t help you reason about relationships, and that is certainly an important part of rationality. So, probability theory is not a complete theory of rationality—one of the main points of this essay.

Predicate calculus can help you reason about relationships; but by itself, it can’t help you reason about evidence. Probability, in the Bayesian interpretation, is a theory of evidence. Can we combine them to get a complete theory of rationality?

“∀x: P(boojum(x)|snark(x)) = 0.4” is a statement of predicate calculus—not probability theory. As written, it is either true or false. But, maybe you aren’t sure which! In that case, you may have a probability estimate for it. And you might want to update your probability estimate given evidence.

P(∀x: P(boojum(x)|snark(x)) = 0.4 | snark(Edward)∧boojum(Edward)) = 0.8

“Given the observations that Edward is both a snark and a boojum, I now think it’s 0.8 probable that the statement ‘the probability that any snark is a boojum is 0.4’ is true.”

What exactly would that mean? How would you use it? What would that say about the probability of a particular snark being a boojum? What happens in the 0.2 probability that the statement is not true? In that case, what does it say about the probability of a particular snark being a boojum? Suppose the probability is actually 0.400001, not 0.4? Does that make the statement false?

The formula freely mixes probability theory with predicate calculus, nesting them any-which-way. There’s a P inside the scope of a ∀ inside the scope of a P. How does that work?

It turns out that, in general, no one yet has an answer to this. The field of probabilistic logic concerns the question. So far, various restricted probabilistic logics have been studied, which do not allow freely mixing probabilities and logical quantifiers. Even these restricted versions can get extremely complicated, and a general theory is currently out of reach.18

Formal systems, rationality, and epistemology

Even if we could fully integrate probability theory and predicate calculus, together they would be far from a complete theory of rationality. They have no account of what the various bits of notation mean, and where they come from. What is “father,” and how did you come up with the idea that ∀x: vertebrate(x) → (∃y: father(y, x)) even might be true?

Logical positivism was the dream that by writing things out precisely enough, in enough detail, we could get answers to such questions. It conclusively failed. The problem is that formalism necessarily depends on intelligent interpretation-in-action to connect it with the real world; to give it “aboutness.”19

Formal systems (such as logic and probability theory) are also only useful once you have a model. Where do those come from? I think it’s important to go about finding models in a rational way—but formal rationality has nothing to offer. (I wrote about this in “How to think real good.”)

As a further point, a complete theory of rationality—if such a thing were possible—would probably not be a complete theory of epistemology. We have very little idea what a good epistemological theory might look like, but my guess is that rationality (even in the broadest sense) would be only a small part.

Since we don’t know how people know things (the subject of epistemology), we should try to find out—empirically, and rationally! Armchair speculation has been seriously misleading. (Basing epistemology on an over-simplified fairy-tale version of Newton’s discovery of gravitation—a popular starting point—is not an empirical or rational approach, and reliably fails.) We need to actually observe people using knowledge, finding knowledge, creating knowledge. Only after much observation could we develop and test hypotheses.

In the 1980s, the “social studies of science” research program began to do this. Unfortunately, much of that was postmodern nonsense. It was marred by the metaphysical and political axes that many of its practitioners wanted to grind and, in most cases, by a lack of understanding of the subject matter. This led to the “Science Wars” of the 1990s, culminating in the Sokal hoax, which pretty nearly killed off the field.

But this program did some valuable observational work, and made some interesting preliminary hypotheses. I’d like to see a return to careful observational study of how science (and other knowledge-generating activity) is done, this time without the ideological baggage.

Here’s one valuable generalization that came out of “social studies of science.” As I pointed out earlier, mathematical formulae are only given “aboutness” by people’s skilled, interpretive application in practical activity. The same is true of knowledge in general!

Further, most human activity is collaborative. It turns out that sometimes what I know cannot be separated from other people’s ability to make sense of it in relationship with particular situations. Making sense of knowing requires an account of the division of epistemic labor. Knowledge is often not a property of isolated individuals.20

Taking these points seriously leads to radical revisions not only in the sorts of explanations that could plausibly be part of an adequate epistemology, but also in the sorts of things it would need to explain.

Historical appendix: Where did the confusion come from?

E. T. Jaynes’ Probability Theory: The Logic of Science appears to be the root source. He was completely confused about the relationship between probability theory and logic.21 There’s strong evidence that when people tried to de-confuse him, he pig-headedly refused to listen.

He wrote that probability theory forms the “uniquely valid principles of logic in general” (p. xx); and:

Our theme is simply: Probability Theory as Extended Logic. The new perception amounts to the recognition that the mathematical rules of probability theory are not merely rules for calculating frequencies of “random variables”; they are also the unique consistent rules for conducting inference (i.e. plausible reasoning) of any kind. (p. xxii)

This is simply false, as I’ve explained in this essay. How did he go wrong?

He got confused by the word “Aristotelian”—or more exactly by the word “non-Aristotelian.”

Aristotelian logic has two truth values, namely “true” and “false.” In the 1930s, there was a vogue for “non-Aristotelian logic,” which added other truth values. For example, a statement could be “meaningless” rather than either true or false. Non-Aristotelian logic turned out to be a dead end, and is a mostly-forgotten historical curiosity.
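The essay does not say which formal system that vogue used. As one concrete instance of adding a "meaningless" value, here is a sketch of the connectives in the style of D. A. Bochvar's 1938 three-valued logic (also known as weak Kleene logic), where any meaningless component makes the whole compound meaningless. The names `TV`, `neg`, `conj`, and `disj` are hypothetical, chosen for illustration.

```python
from enum import Enum

class TV(Enum):
    """Three truth values; MEANINGLESS is the extra, non-Aristotelian one."""
    FALSE = 0
    TRUE = 1
    MEANINGLESS = 2

def neg(a):
    """Negation swaps TRUE and FALSE; a meaningless statement stays meaningless."""
    if a is TV.MEANINGLESS:
        return TV.MEANINGLESS
    return TV.FALSE if a is TV.TRUE else TV.TRUE

def conj(a, b):
    """Conjunction with the "infectious" rule: any meaningless part spoils the whole."""
    if TV.MEANINGLESS in (a, b):
        return TV.MEANINGLESS
    return TV.TRUE if (a is TV.TRUE and b is TV.TRUE) else TV.FALSE

def disj(a, b):
    """Disjunction defined via De Morgan, so it inherits the infectious rule."""
    return neg(conj(neg(a), neg(b)))

# Print the full truth table for conjunction (nine rows).
for a in TV:
    for b in TV:
        print(a.name, "AND", b.name, "=", conj(a, b).name)
```

One consequence is that "A or not A" is no longer always true: when A is meaningless, so is the disjunction.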

Predicate calculus is not Aristotelian logic, but it is not “non-Aristotelian”, either! It has only two truth values.

What’s confusing is that Aristotelian logic was extended in two different dimensions: by adding truth values (to produce non-Aristotelian logic) and by allowing nested quantifiers (to produce predicate calculus).
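The second dimension, nesting, is easy to see in a small finite model. This sketch evaluates the two sentences from Frege's example quoted in footnote 13, with `all` standing in for ∀ and `any` for ∃ over a finite domain. The boys, girls, and who-loves-whom relation are hypothetical, invented for illustration. Only the order of the quantifiers differs, yet the truth values differ, and each is still just true or false.

```python
# Hypothetical finite model: who loves whom.
BOYS = ["al", "bo"]
GIRLS = ["cy", "di"]
LOVES = {("al", "cy"), ("bo", "di")}

def loves(boy, girl):
    """True if the pair is in the (made-up) LOVES relation."""
    return (boy, girl) in LOVES

# ∀x ∃y: loves(x, y): every boy loves some girl (possibly a different one each).
every_boy_loves_some_girl = all(any(loves(b, g) for g in GIRLS) for b in BOYS)

# ∃y ∀x: loves(x, y): one particular girl is loved by every boy.
some_girl_loved_by_every_boy = any(all(loves(b, g) for b in BOYS) for g in GIRLS)

print(every_boy_loves_some_girl)     # True
print(some_girl_loved_by_every_boy)  # False
```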

When someone tried to explain to Jaynes that probability theory only extends Aristotelian logic, not predicate calculus, he remembered the phrase “non-Aristotelian logic” and read about that, and (rightly) concluded it was irrelevant to his project. Then, when that someone said “no, you missed the point, what matters is predicate calculus,” Jaynes just dug in his heels and refused to take that seriously.

There are several places in his book where he says this explicitly. There’s a long discussion in the section titled “Nitpicking” (p. 23). It’s an amazing expression of defiantly obstinate confusion, and well worth examining to learn the linguistic signs that you are refusing to see the obvious: out of arrogance, or because you half-realize that accepting it would collapse your grandiose Theory Of Everything.

There’s another refusal on page xxviii:

Although our concern with the nature of logical inference leads us to discuss many of the same issues, our language differs greatly from the stilted jargon of logicians and philosophers. There are no linguistic tricks and there is no “meta-language” gobbledygook; only plain English… No further clarity would be achieved… with ‘What do you mean by “exists”?’

Predicate calculus is the standard “meta-language” for mathematics, and getting clear about what “exists” means was Frege’s central insight that made that possible.22

Jaynes is just saying “I don’t understand this, so it must all be nonsense.”

A Socratic dialog

Pop Bayesian (PB): Wow, I have a faster-than-light starship! [A complete theory of rationality.]

Me: That seems extremely unlikely… how does it work?

PB: It’s about five inches long and has pointy bits at one end. Look!

Me: That’s a fork. [That’s a minor generalization of propositional calculus.]

PB: No, it’s totally an FTL starship! [A complete theory of rationality!]

Me: No, it’s not.

PB: Yes it is!

Me: Look, a minimum requirement for an FTL starship is that it go faster than light. [A minimum requirement for a general theory of rationality is that it can do everything predicate calculus and everything probability theory can do.]

PB: Yeah, look! I can make it go way fast! *Throws the fork across the room, really hard.*

Me: Uh… I don’t think you understand how fast light goes [how much more powerful predicate logic is than propositional logic].

PB: I can make it go however fast! I could shoot it out of a gun, even!

Me: Um, you don’t seem to know enough physics to understand the in-principle reason you can’t accelerate ordinary objects to FTL speeds. [If you can mistake probability theory for a general theory of rationality, you must be missing the mathematical background which you’d need in order to understand why it’s a non-starter.]

- 1. Predicate calculus is still more computationally expensive; in fact, it is provably arbitrarily expensive. I’m not advocating it as a general engineering approach to rationality either.

- 2. Or maybe not. Since propositional calculus does not let you talk about anything, its “therefore” really isn’t the same as a common-sense “therefore.” Some people think this is important, but it’s not relevant to this essay, so let’s move on.

- 3. E. T. Jaynes did not understand this. He was badly confused here already. He failed to understand the relationship between propositional and Aristotelian logic, much less the more complicated relationship between Aristotelian and predicate logic. In Probability Theory: The Logic of Science, p. 4, he claims to explain what a syllogism is, but his explanation is actually of modus ponens! Modus ponens is an operation of propositional logic, whereas the syllogism requires Aristotelian logic, i.e. a single universal quantifier. Jaynes did not see the distinction between the two; a very basic error.

- 4. If the events are not independent, it’s still possible to calculate probabilities, but it is more complicated.

- 5. In fact, Cox pointed this out in his 1961 book The Algebra of Probable Inference, quoting Boole in Footnote 5, p. 101. In this passage, Boole not only makes the connection between the frequentist and logical interpretations of probability, he suggests that it is necessary—which is the point of Cox’s Theorem.

- 6. In the Bayesian interpretation, anyway.

- 7. An important exception is quantum mechanics, which can be seen as an extension of probability theory in which probabilities can be negative or complex numbers. (Thanks to John Costello (@joxn) for pointing this out.) It is not “like” probability theory in Cox’s sense, and not useful as a way of reasoning about macroscopic uncertainty, but is of great practical (engineering) and theoretical (physics) importance.

- 8. This might seem obvious, but small children can’t do it reliably.

- 9. Or, more accurately, you can subitize them.

- 10. Technically, “this” is an indexical. Mid–20th-century logicians realized that indexicals allow for implicit universal quantification—when combined with some method for instantiating, or determining the reference of, each indexical. They didn’t have much of a story about the method. One of the central innovations of the Pengi system I built with Phil Agre was using (simulated) machine vision to bind indexicals to real-world objects.

- 11. Technically, this is not exactly what “Aristotelian logic” means; I’m skipping some fiddly details that are only of historical interest, and not relevant to this discussion.

- 12. Here “I” and “that” are indexicals, which give implicit quantification, but “some” is an explicit quantifier. An explicit formalization of this situation requires nested quantification, and you can’t get that with just indexicals, even implicitly.

- 13. An accurate account of nested quantification was the key to modern logic. It was developed by Gottlob Frege. To quote Wikipedia: “In effect, Frege invented axiomatic predicate logic, in large part thanks to his invention of quantified variables, which eventually became ubiquitous in mathematics and logic, and which solved the problem of multiple generality. Previous logic had dealt with the logical constants and, or, not, and some and all, but iterations of these operations, especially ‘some’ and ‘all’, were little understood: even the distinction between a sentence like ‘every boy loves some girl’ and ‘some girl is loved by every boy’ could be represented only very artificially, whereas Frege’s formalism had no difficulty… A frequently noted example is that Aristotle’s logic is unable to represent mathematical statements like Euclid’s theorem, a fundamental statement of number theory that there are an infinite number of prime numbers. Frege’s ‘conceptual notation’ however can represent such inferences.”

- 14. Let’s simplify this to natural, biological fatherhood, ignoring issues of legal parenthood and laboratory genetic manipulation.

- 15. Formally, we bind x to Edward and instantiate to get vertebrate(Edward) → (∃y: father(y, Edward)), and then apply modus ponens (implication elimination) to get ∃y: father(y, Edward).

- 16. A few months after writing this, I learned about Tremblay’s salamander, an all-female species with no fathers. They are triploid, and reproduce only by self-fertilization. I read about Tremblay’s salamander in Randall Munroe’s What If?: Serious Scientific Answers to Absurd Hypothetical Questions, which is full of fascinating factoids of this sort.

- 17. It’s worth noting that whereas most of what you can do with logic, you can’t do with probability theory, everything you can do with probability theory, you can do with predicate calculus. You can easily axiomatize probability theory, and thereby embed the whole thing in predicate calculus.

- 18. I find this somewhat surprising, actually, and have been tempted to dive in and see if I can solve the problem. But, looking at some examples of reasoning about probabilities of probabilities helps one see, quite quickly, why this is hard. It’s difficult to know what they mean. Interestingly, Jaynes worked on this, developing a formalism he called Ap, which I’ve discussed elsewhere. Ap handles only the very simplest cases (and is probably unworkable in practice for even those), but it does give some insight.

- 19. Wittgenstein, who was partly responsible for logical positivism in the first place, diagnosed this failure clearly in his Philosophical Investigations. Heidegger presented much the same insight earlier in Being and Time, although not as clearly, and not with reference to logical positivism specifically.

- 20. This means that the representational theory of mind, which descends from logical positivism, is unworkable. See “A billion tiny spooks.”

- 21. He was heavily influenced by Cox’s work, which he entirely misunderstood. Cox was definitely not confused, and not to blame; he was explicit that he was discussing only the propositional calculus. Cox’s writing is delightfully informal; if you already know logic, his book is enormously entertaining. If you don’t know logic, the informality is liable to mislead you.

- 22. Part of the obstacle to understanding nested quantifiers had been Aristotle’s misunderstanding of the existential quantifier even by itself. If you want to geek out about the history of logic, you can read about this in “The Square of Opposition.”