I have intense mixed feelings about this:

E is the letter in today’s sciences of mind. E adjectives proliferate. Nowadays it is hard to avoid claims that cognition – perceiving, imagining, decision-making, planning and so on – is best understood in E terms. The list is long, including: embodied, enactive, extended, embedded, ecological, engaged, emotional, expressive, emergent and so on.

E-approaches propose that cognition depends on embodied engagements in the world. They rethink the alternative, ‘sandwich’ view of cognition as something pure that can be logically isolated from non-neural activity. Traditionally, cognition is imagined to occur wholly within the brain. It occurs tidily in-between perceptual inputs and behavioral outputs. Cognition is informed by, and shapes, non-neural bodily engagements, but cognition and embodied interactions never mix.

A wealth of empirical findings has motivated various challenges to this traditional view. Experimental work encourages the idea that cognition may depend more strongly and pervasively on embodied activity than was previously supposed. Cognition may depend to a considerable extent and might even be constituted by the ways and means that cognizers actively and dynamically interact and engage with the world and others over time.

Those are the opening paragraphs of an excellent 2015 summary article, “How Embodied Is Cognition?”.1

I wrote a practically identical paper in 1986 with Phil Agre, “Abstract Reasoning as Emergent from Concrete Activity.”2 I can’t find it on the web, so I’ve included the full text below.

Old Man Yells At Cloud: “Goddammit, I told you so thirty years ago!” But you don’t care about that.

Rather, these ideas matter now: as a conception of what human beings are, and what we can potentially be, which is radically different from the mainstream philosophical tradition. If they are right, they have significant implications for how society, culture, and our selves may develop in the near future. That is what I now call “the fluid mode.” I plan to write about this connection soon, and will refer back to this paper then.

My mixed feelings: I’m glad that, thirty years later, these ideas are getting much better known. I’m annoyed that cognitive “science”—a mistaken, unscientific ideology of meaningness—has continued to exert a harmful, distorting influence on our understanding of ourselves in the meantime. I will say a little about that in an afterword at the end of this web page.

First, though, the paper. Here it is, verbatim, except I reformatted the footnotes. Also, I’ve included some minor commentary in the body of the paper, in [square brackets and italics].

Abstract

We believe that abstract reasoning is not primitive, but derived phenomenologically, developmentally, and implementationally from concrete activity. We summarize recent advances in the understanding of the mechanisms of concrete activity which suggest paths for exploring the emergence of abstract reasoning therefrom.

We argue that abstract reasoning is made from the same building blocks as concrete activity, and consists of techniques for alleviating the limitations of innate hardware. These techniques are formed by the internalization of patterns of interaction between an agent and the world. Internalization makes it possible to represent the self, and so to reflect upon the relationship between the self and the world. Most patterns of abstract thought originate in the culture. We believe that ideas such as plans, knowledge, complexity, understanding, order, search, and forgetting are learned. We present examples of everyday planning and analyze them in this framework. Finally, we describe cognitive cliches, which we take to be the most abstract mental structures.

Program

We want to understand the emergence of abstract reasoning from concrete activity. We believe that abstract reasoning is not innate, but derived from concrete activity, in three senses: phenomenologically, developmentally, and implementationally. We hope that an understanding of the first two senses can guide the development of an understanding of the third.

Heidegger argues that the phenomenologically primordial way of being is involvement in a concrete activity.3 Everyday activity consists in the use of equipment for a specific reason. When this activity is going well, it is “transparent.” An experienced driver does not have to think about driving; he just does it, and he can be doing something else, possibly requiring abstract thought, at the same time. It is only when there is a breakdown in the activity that abstract thought is needed. Only when I have too many things to do do I need to make a plan or schedule, to wonder what order to do them in and how they can be most efficiently combined. And it is only when that too fails that I might give up, become curious, and reflect theoretically about scheduling algorithms.

Several branches of psychology suggest that abstract reasoning appears developmentally later in individuals than involvement in concrete activity. Jean Piaget’s stage theory of cognitive development traces development from the beginning of infancy, in which there are only blind stimulus/response reflexes with no abstraction, representation, or reasoning.4 “Formal operations”—fully abstract reasoning—appear only around puberty. Psychoanalytic developmental psychology (particularly object-relations theory as found in D. W. Winnicott) sees the infant as a chaotic mass of impulses and fantasies leading directly to action.5 The infant has no self-representation and so is undifferentiated from the world. Gradually, scattered mental elements are integrated to produce adult rational thought. Crucial to the process of integration is internalization: getting control over interactions with the environment by bringing them inside yourself. Internalization leads to decreased egocentricity. By representing the relationship between his self and the world, a person is better able to be detached from it. Detachment is never complete; the ability to distinguish self from other, fantasy from reality, continues to develop throughout life.

[The following paragraph did not appear in the published version of the paper. I’ve derived this web version from the LaTeX source file, which includes notes to myself and some deleted material. A note here says I removed it as “anthropologically controversial.” I’m not sure whether the substance is still anthropologically controversial. The word “primitive” is probably politically incorrect, though.]

There is another way in which abstract reasoning is developmentally derived from concrete activity. Abstract reasoning may be a historically recent development, and culturally specific. People in primitive cultures do not engage in such reasoning, at least not to the same extent and not in as sophisticated ways.

The third sense in which we believe abstract reasoning is derived is implementationally. Constructivism is the position, held by many developmental psychologists, that human development occurs roughly in stages, and that each stage is built upon the previous one. We believe that abstract reasoning appears late because it is emergent from, or parasitic upon, concrete activity. We want to understand the nature of this emergence relationship. How, even in principle, can abstract thought be mechanistically derived from simple action?

Concrete activity

Study of the emergence of abstract reasoning must begin with an understanding of concrete activity. We believe that the beginnings of such an understanding are found in Gary Drescher’s work on the machinery to implement infant sensorimotor learning and in Agre’s work on that needed to implement adult routine activity.

Agre’s theory of routine activity describes adult action when everything is going well: the fluent, regular, practiced, unproblematic activity that makes up most of everyday life.6

The theory includes an account of the innate hardware, which we believe is, loosely, connectionist. It also includes a description of the sorts of cognition and activity that are most appropriate to that hardware. It turns out that the sort of computation most appropriate to the hardware is also the primary sort of computation that actually transpires in everyday life, namely concrete activity.

Routine activity is situated: it makes extensive use of the immediate surrounds and their accessibility for observation and interaction. Rather than using internal datastructures to model the world, the agent accesses the world immediately through perception. Much of the theory is concerned with the emergence of routines, which are dynamics of interaction: patterns of activity that occur not as a result of their representation in the head of the agent, but due to a Simon’s-ant7 relationship to a complex environment. The world and the mind interpenetrate.8 Routine activity is conducted in a society of other people, who provide a variety of types of support, from helping when asked to transmitting cultural techniques for solving classes of problems. Routine activity is concerned with the here-and-now; it rarely requires planning into the future, reflection upon the past, or consideration of spatially distant causes. It rarely involves thinking new thoughts; for the most part existing patterns of activity suffice to cope with the situation. Finally, routine activity takes the forms most natural to the hardware: those that can be implemented efficiently on a connectionist architecture.

The architecture we propose to support routine activity consists of a very large number of simple processors. The connectivity of the processors is quasi-static, meaning that the cost of creating a new connection between processors is very high relative to that of using an existing connection. The connections pass tokens from a small set of values, rather than the real-valued weights used in most connectionist learning schemes.
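To make “quasi-static” concrete, here is a minimal Python sketch. It is not from the paper: the class and processor names, the three-token alphabet, and the cost figures are hypothetical, chosen only to show fixed wiring, token-valued connections, and a connection cost far above the cost of use.

```python
from enum import Enum


class Token(Enum):
    """A small, fixed set of values that can travel along a connection."""
    OFF = 0
    ON = 1
    ALERT = 2


# Hypothetical relative costs: creating a connection is far more
# expensive than sending a token over one that already exists.
CONNECT_COST = 1000
USE_COST = 1


class QuasiStaticNetwork:
    def __init__(self):
        self.inputs = {}  # processor -> names of the processors wired into it
        self.rules = {}   # processor -> function from input tokens to a token
        self.state = {}   # processor -> token currently on its output wire
        self.cost = 0

    def add_processor(self, name, inputs=(), rule=None):
        """Wiring is fixed when a processor is created, and each wire is costly.
        Processors with no inputs act as sensors whose state is set from outside."""
        self.inputs[name] = list(inputs)
        self.rules[name] = rule
        self.state[name] = Token.OFF
        self.cost += CONNECT_COST * len(self.inputs[name])

    def sense(self, name, token):
        self.state[name] = token

    def step(self):
        """Update every non-sensor processor synchronously from its inputs."""
        new_state = dict(self.state)
        for name, wires in self.inputs.items():
            if wires:
                tokens = tuple(self.state[w] for w in wires)
                self.cost += USE_COST * len(wires)
                new_state[name] = self.rules[name](tokens)
        self.state = new_state


net = QuasiStaticNetwork()
net.add_processor("sees_cereal_box")
net.add_processor("hungry")
net.add_processor(
    "reach",
    inputs=["sees_cereal_box", "hungry"],
    rule=lambda t: Token.ON if t == (Token.ON, Token.ON) else Token.OFF,
)
net.sense("sees_cereal_box", Token.ON)
net.sense("hungry", Token.ON)
net.step()
```

Each further step costs 2 units, while a single new wire would cost 1000; that asymmetry is the point of the sketch.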

Such an architecture can represent abstract propositions, if at all, only by adding a layer of interpretation which is very computationally expensive. We think that indexical-functional representations are used instead. These are fully indexical representations which can be evaluated relative to the current situation very cheaply by virtue of their grounding in sensorimotor primitives. They can be thought of as definite noun phrases: an indexical-functional representation picks out, for example, the cereal box or the tea strainer from the visual field. The indexicality of such representations is mostly a matter of egocentricity. The cereal box implicitly means the cereal box in front of me. Compare developmental psychology’s description of child thought as egocentric.

Indexical-functional representations can’t involve logical individuals or variables; the hardware cannot reason about identity. Traditional AI representations use variables which are bound to constants which in turn are somehow connected by the sensory system to objects in the real world. Each constant is connected to a distinct real-world object. So, for example, we can say that all bowls are containers, and instantiate this on bowl-259. If bowl-259 is seen later, it will be recognized as the same one. For indexical-functional representations, things that look the same are the same. All you can do is see if there is a bowl there or not. There is no way to represent permanent objects in Piaget’s sense.
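The contrast between bound constants and indexical-functional tests can be sketched in a few lines of Python. This is an illustrative assumption, not either system’s actual machinery: `SymbolicMemory` stands in for a traditional AI knowledge base, and `bowl_present` for an indexical-functional representation that can only ask whether some bowl is in view.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Percept:
    """What perception delivers: observable features, and nothing else."""
    kind: str
    color: str


class SymbolicMemory:
    """Traditional AI style: facts are attached to unique constants."""

    def __init__(self):
        self.facts = {}  # constant name -> set of predicates

    def tell(self, constant, predicate):
        self.facts.setdefault(constant, set()).add(predicate)

    def apply_rule(self, if_pred, then_pred):
        """Instantiate a universal rule such as 'all bowls are containers'."""
        for preds in self.facts.values():
            if if_pred in preds:
                preds.add(then_pred)

    def ask(self, constant, predicate):
        return predicate in self.facts.get(constant, set())


def bowl_present(visual_field):
    """Indexical-functional style: 'the bowl' is just a test on what is
    currently seen. Two identical-looking bowls give identical percepts,
    so nothing distinguishes one from the other."""
    return any(p.kind == "bowl" for p in visual_field)


memory = SymbolicMemory()
memory.tell("bowl-259", "bowl")
memory.tell("bowl-260", "bowl")
memory.tell("bowl-260", "cracked")
memory.apply_rule("bowl", "container")

monday = [Percept("bowl", "white")]   # bowl-259 in front of me
tuesday = [Percept("bowl", "white")]  # bowl-260, which looks just the same
```

The symbolic memory can say that bowl-259 is not cracked; the percepts for the two days are simply equal, so the indexical-functional test has no way to ask the question.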

Another consequence of quasi-static connectivity is that introspection is very expensive: you can’t examine your own datastructures unless you built them with extra connections and processing elements for the purpose.

The theory of routines is incomplete; it describes adult mental architecture without explaining how that architecture came about. Drescher gives a theory of sensorimotor development motivated to fine levels of detail by Piaget’s observations of the early stages of infant development.9 He proposes hardware to support the emergence of the necessary software to account for the first five stages.

Drescher’s architecture, the Schema Mechanism, is quasi-static. It consists of very large numbers of items and schemas, which respectively represent properties of the world and the effects of actions in situations. Items are boolean-valued representations of conditions in the world. Schemas are indexical assertions that if certain items hold, after a given action other items will hold. The architecture initially has only sensory primitives as items and no schemas. Various learning techniques create new schemas to represent observed regularities, compound procedures for achieving states of items, and synthetic items, which represent conditions in the world which are not immediately observable. Some of these techniques collect empirical statistics to discover correlations. Implementation of the architecture is in progress.
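A drastically simplified Python sketch of items, schemas, and one statistics-gathering step follows. It is an assumption-laden illustration, not Drescher’s mechanism: items are plain booleans, only context-free schemas are proposed, and the ratio test and its thresholds (`ratio`, `min_trials`) are invented for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Schema:
    """If the context items hold, then after the action the result items hold."""
    context: frozenset
    action: str
    result: frozenset


class ResultLearner:
    """For each (action, item) pair, count how often the item turns on
    when the action is taken versus when some other action is taken."""

    def __init__(self, actions, items):
        self.actions = list(actions)
        self.items = list(items)
        self.on_with = defaultdict(int)         # (action, item) -> turn-ons after action
        self.trials_with = defaultdict(int)     # action -> times it was taken
        self.on_without = defaultdict(int)      # (action, item) -> turn-ons otherwise
        self.trials_without = defaultdict(int)  # action -> times it was not taken

    def observe(self, before, action, after):
        """before/after map item names to booleans around one action."""
        for a in self.actions:
            taken = a == action
            if taken:
                self.trials_with[a] += 1
            else:
                self.trials_without[a] += 1
            for item in self.items:
                if after[item] and not before[item]:
                    if taken:
                        self.on_with[(a, item)] += 1
                    else:
                        self.on_without[(a, item)] += 1

    def propose(self, ratio=2.0, min_trials=5):
        """Propose context-free schemas whose action makes a marked difference."""
        schemas = []
        for a in self.actions:
            if self.trials_with[a] < min_trials:
                continue
            for item in self.items:
                p_with = self.on_with[(a, item)] / self.trials_with[a]
                n = self.trials_without[a]
                p_without = self.on_without[(a, item)] / n if n else 0.0
                if p_with > 0 and p_with >= ratio * p_without:
                    schemas.append(Schema(frozenset(), a, frozenset([item])))
        return schemas


# Hypothetical toy world: moving a hand produces a touch on that side.
learner = ResultLearner(["hand_left", "hand_right"], ["touch_left", "touch_right"])
for step in range(20):
    action = "hand_left" if step % 2 == 0 else "hand_right"
    before = {"touch_left": False, "touch_right": False}
    after = {"touch_left": action == "hand_left",
             "touch_right": action == "hand_right"}
    learner.observe(before, action, after)

learned = learner.propose()
```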

Drescher presents a hypothetical scenario of his architecture progressing through the first five stages of infant development. Initially, all the representations constructed are purely indexical, but eventually these are partly deindexicalized, so that there develop representations of objects independent of the sensory mode in which they are perceived and then of objects independent of whether they are seen. Similarly, actions are initially represented egocentrically, but come to be represented independent of the actor who performs them.

The emergence of abstract reasoning

Abstract reasoning uses abstract representations and performs detached search-like computations.

Abstract representations differ from concrete indexical-functional representations along several dimensions, and intermediates are possible. They are universally quantified and so capture eternal truths, independent of the current situation. They are general purpose: a very broad range of sorts of knowledge can be encoded in a uniform framework. They can involve variables which must be instantiated in use; this requires notions of identity and difference. They are compositional: sentence-like, in that the meaning of the whole depends on the meanings of the parts. Natural language spans the range from indexical-functional to abstract. Utterances such as “the big one!” (requesting a coworker to pass a hammer) encode indexical-functional representations; those such as “Everyone hates the phone company” are abstract.

By detached computation, we mean that the agent, presented with a situation and a goal, thinks hard about how to achieve the goal, develops a plan, and then executes the plan without much additional thought. Because the agent is detached from the world, he cannot depend on clues in the world to tell him what to do, and he must reason about hypothetical future situations, rather than simply inspecting the current state of the world. Any sort of search in an internally represented state-space is a detached computation.
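Detached computation in this sense can be sketched as breadth-first search over an internal model, followed by blind execution. The Python below is a minimal illustration under hypothetical assumptions: a one-dimensional world of positions 0 to 4, and an obstacle that the model does not know about.

```python
from collections import deque


def plan(start, goal, successors):
    """Breadth-first search over an internal model of the world.
    successors(state) yields (action, next_state) pairs as *predicted by
    the model*; the real world is never consulted."""
    frontier = deque([start])
    came_from = {start: None}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            actions = []
            while came_from[state] is not None:
                prev, action = came_from[state]
                actions.append(action)
                state = prev
            return list(reversed(actions))
        for action, nxt in successors(state):
            if nxt not in came_from:
                came_from[nxt] = (state, action)
                frontier.append(nxt)
    return None


def execute_blind(steps, world_step, state):
    """Run the plan without much additional thought: no re-planning."""
    for action in steps:
        state = world_step(state, action)
    return state


def line_model(pos):
    """The agent's internal model: positions 0..4 on a line."""
    if pos < 4:
        yield "right", pos + 1
    if pos > 0:
        yield "left", pos - 1


def real_world(pos, action):
    return pos + 1 if action == "right" else pos - 1


def world_with_obstacle(pos, action):
    # Hypothetical change the model knows nothing about:
    # something now blocks the way from position 1 to position 2.
    if action == "right" and pos == 1:
        return pos
    return real_world(pos, action)


steps = plan(0, 3, line_model)
```

Executed in `real_world`, the plan reaches position 3; executed in `world_with_obstacle`, it silently stalls at 1, because a detached agent is not looking.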

We believe that abstract reasoning uses the same hardware as concrete activity. It is computationally expensive (in general, and particularly on quasi-static hardware), and since it is not necessary for routine activity, it is resorted to only when routines break down, when reflection or problem-solving are required. Thus, we believe that abstract reasoning is perforated: it is not a coherent module that systematically accounts for all or even a class of mental phenomena. It is not a general-purpose reasoning machine, as it appears to be, but only a patchwork of special cases. (Later in the paper, we sketch a general theory of special cases.) It appears seamless because people are good at giving post-hoc rationalizations for their actions, but those rationalizations are not causally connected to the activity they purport to explain. Techniques are often abstract by virtue of depending only on the form, not the content, of the concrete problems they apply to.

We believe that abstract reasoning consists of a set of techniques, mostly culturally transmitted, for alleviating the limitations of quasi-static hardware to constrain the ways one organizes one’s activity. These techniques are implemented with the same building blocks used in routine activity, but interconnected in different ways, which generally run slowly and consume much hardware. The techniques mostly act to build static networks to routinely cope with some new type of situation: they compile abstract knowledge into machinery for concrete activity. This is similar to chunking in Soar,10 the use of a dependency network in Agre’s running arguments,11 the instantiation of virtual structures in the Schema Mechanism, and Jeff Shrager’s mediation theory.12
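One way to picture “compiling abstract knowledge into machinery for concrete activity” is to cache the outcome of a slow search as a direct situation-to-action table. The sketch below is loosely in the spirit of chunking; it is not a rendering of Soar, running arguments, or the Schema Mechanism, and the toy world and all names are hypothetical.

```python
from collections import deque


def search_path(start, goal, successors):
    """Slow, detached reasoning: breadth-first search returning the
    [(state, action), ...] pairs along a path to the goal."""
    came_from = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while came_from[state] is not None:
                prev, action = came_from[state]
                path.append((prev, action))
                state = prev
            return list(reversed(path))
        for action, nxt in successors(state):
            if nxt not in came_from:
                came_from[nxt] = (state, action)
                frontier.append(nxt)
    return []


class Routines:
    """A situation -> action table built by compiling search results."""

    def __init__(self, goal, successors):
        self.goal = goal
        self.successors = successors
        self.table = {}
        self.searches = 0  # how often slow reasoning was needed

    def act(self, situation):
        if situation not in self.table:
            self.searches += 1
            # Compile every step of the found path, not just the first one.
            for state, action in search_path(situation, self.goal, self.successors):
                self.table.setdefault(state, action)
        return self.table.get(situation)


def line_model(pos):
    """Hypothetical world: positions 0..4 on a line."""
    if pos < 4:
        yield "right", pos + 1
    if pos > 0:
        yield "left", pos - 1


routines = Routines(goal=4, successors=line_model)
```

After the first call, situations along the compiled path are handled by lookup alone; the search is resorted to only for situations the table does not cover.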

Though abstract reasoning is emergent from computation, there is no reason a priori to suppose that it is also computational. The nature of a system can change radically across an emergent-implementation boundary. For example, from quantum mechanics there emerge chemical systems, which are not quantum mechanical; from chemistry there emerge biological systems, which are not chemical; from biological and from electronic systems there emerge computational systems, which are not biological or electronic. In each of these cases, when we say that a system is not of a certain sort, we mean that it is best described in other terms. A program can be reduced to a pattern of electrical impulses, but the only way it can be reasoned about in that framework is by blind simulation. Programs participate in emergent rules that allow us to reason about them efficiently and independently of their hardware implementation. We will not be surprised if the mind is similarly made of a stuff that is not computational, though it emerges from a computational medium. Psychoanalysis and anthropology, to name just two disciplines, have made sophisticated but entirely non-computational models of mind-stuff.

[The preceding paragraph did not appear in the published paper. My note to myself was “I got too much flak about this”—from readers of draft versions. I guess I’ll be surprised if I don’t get flak again now.]

Internalization

The content of routine activity is not innate; it must be learned. The Schema Mechanism provides one account of this development; we don’t know if it is sufficient. But apart from the hardware required, we can study the dynamics of skill acquisition: in what way is it learned?

The problem is in a way more difficult than earlier approaches such as Sussman’s13 suggest, because most interesting activity arises not from the execution of plans, which are accessible datastructures, but from interactional routines, which do not derive from explicit representations. You can, and typically do, participate in a routine without being aware of it. So the first step of improving a routine is to make some aspect of it explicit. As each new aspect of a routine is represented, it can be modified. This process rarely terminates in a complete procedural representation.

The making explicit of routines is a good candidate for the mechanistic correlate of the psychoanalytic concept of internalization. Agre’s “Routines” gives this example of internalizing a routine:

An everyday example is provided by the myopic vacuumer of dining rooms who hasn’t thought to describe the process as one of alternating between vacuuming and furniture-moving. Explicitly representing that aspect of the vacuuming process should make one think to move all the furniture before getting started…. Vacuuming is often best characterized as interleaving the processes of moving the furniture, running the vacuum around, and returning the furniture. Sometimes it is better to deinterleave these processes.

We don’t know how internalization happens in general. For lack of a better idea, we reluctantly suspect that a brute-force statistical induction engine is involved. However, something like Dan Weld’s aggregation would be very useful in detecting loops such as the vacuuming one.14 Unfortunately, known aggregation algorithms do not scale well with the complexity of the input, and probably will fail in the face of the richness of the real world.

A strong clue that a cyclic process is occurring is a rhythm. The Schema Mechanism can be extended with hardware for rhythm detection. Add to each item hardware which keeps a history of the last several changes in value of the item and computes the variance of the Δt. If the variance is small, a synthetic item can be created to represent the rhythmicity of the given one, and the state of the synthetic item can be maintained in hardware. These synthetic rhythm items themselves can have rhythm detectors, so that more complex rhythms can be detected. How useful such hardware would be remains to be seen.
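The proposed rhythm detector is simple enough to sketch directly. This Python version follows the description above (a short history of the times at which an item changed value, and the variance of the intervals between them), but the history length and the variance threshold are arbitrary assumptions.

```python
from collections import deque
from statistics import pvariance


class RhythmDetector:
    """Attached to one boolean item: remembers when the item last changed
    value and reports rhythmicity when the intervals are regular."""

    def __init__(self, history=6, max_variance=0.5):
        self.changes = deque(maxlen=history)  # times of recent value changes
        self.max_variance = max_variance
        self.last_value = None

    def update(self, t, value):
        """Feed the item's value at time t; return True if it is rhythmic.
        The return value can serve as the state of a synthetic item."""
        if value != self.last_value:
            if self.last_value is not None:
                self.changes.append(t)
            self.last_value = value
        return self.rhythmic()

    def rhythmic(self):
        if len(self.changes) < self.changes.maxlen:
            return False  # not enough history yet
        times = list(self.changes)
        intervals = [b - a for a, b in zip(times, times[1:])]
        return pvariance(intervals) <= self.max_variance


# An item that toggles every 3 ticks, and one that changes irregularly.
regular = RhythmDetector()
regular_results = [regular.update(t, (t // 3) % 2 == 1) for t in range(30)]

change_times = [2, 3, 9, 11, 20, 21, 30]
irregular = RhythmDetector()
irregular_results = [irregular.update(t, sum(c <= t for c in change_times) % 2 == 1)
                     for t in range(35)]
```

Because `update` returns a boolean, the detector’s output can itself be treated as an item and watched by another `RhythmDetector`, which is the stacking of detectors suggested above.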

One result of internalization is the construction of the mind’s eye. Imagine that parts of the central system can gain write access to the wires on which the outputs of sensory systems, at various levels of abstraction, are delivered to the central system. Then one part of the central system can induce hallucinations, and another part will do something based on what it implicitly supposes are inputs from the world. In order to avoid actually acting in the imaginary situation, it must also be possible to short-circuit outputs to the motor system. In this way, the ability to consider hypothetical situations begins. If the situation is well-enough understood, the results of imaginary actions can be hallucinated. Iterated, this permits the internalization of routines. The resulting internal representation of a routine is another routine which simulates it, interacting with the sensorimotor interface, rather than the real world. This is, we think, the mechanistic correlate of the introspective phenomenon of visual imagery. The mind’s eye extends to all sensory modalities and to motor actions. Constructed similarly is the internal dialog, the sentences we say to ourselves silently. Computation in this internal world is just like computation in the real one; it is just as concrete and situated, except that the situation is imaginary.
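The mind’s-eye arrangement can be sketched as one routine driven either by real percepts or by hallucinated ones, with motor output suppressed in the second case. The Python below is a hypothetical illustration: the `ROUTINE` and `TRANSITIONS` tables borrow the vacuuming example, and their state names are invented.

```python
class Agent:
    """One routine, driven either by the world or by the mind's eye."""

    def __init__(self, routine, model):
        self.routine = routine  # percept -> action
        self.model = model      # (percept, action) -> predicted next percept
        self.motor_log = []     # actions actually sent to the body

    def act(self, percept):
        """Ordinary activity: a real percept in, a real motor command out."""
        action = self.routine(percept)
        self.motor_log.append(action)
        return action

    def imagine(self, percept, steps):
        """Write hallucinated percepts onto the input 'wires', short-circuit
        motor output, and let the model supply the imagined consequences."""
        trace = []
        for _ in range(steps):
            action = self.routine(percept)   # the very same routine runs
            trace.append((percept, action))  # nothing reaches motor_log
            percept = self.model(percept, action)
        return trace


# Hypothetical states and actions for the vacuuming example.
ROUTINE = {
    "chair_in_way": "move_chair",
    "floor_dirty": "vacuum",
    "floor_clean": "return_chair",
}
TRANSITIONS = {
    ("chair_in_way", "move_chair"): "floor_dirty",
    ("floor_dirty", "vacuum"): "floor_clean",
    ("floor_clean", "return_chair"): "done",
}

agent = Agent(
    routine=lambda p: ROUTINE.get(p, "rest"),
    model=lambda p, a: TRANSITIONS.get((p, a), p),
)
trace = agent.imagine("chair_in_way", 3)
```

The imagined trace makes the move/vacuum/return interleaving available for inspection without moving any furniture, which is one way such a trace could feed the internalization of the routine.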

We suspect that early language acquisition is intimately tied up with the first development of abstract thought. Natural language provides a bridge between indexical-functional and abstract representations. Children’s first utterances are single words: “ball!” “Mummy!” “hungry!” The production of these highly-indexical “observatives” might very well be directly driven by indexical-functional representations. There follows a long two-word stage, producing utterances like “give ball,” “more cookie,” and “bad kitty.” There is no real syntax to these utterances, but there are a dozen or so different kinds of semantic relationships between the two words to be mastered.15 The compositionality makes even such simple sentences less than fully indexical. Production of such utterances both requires and drives the development of abstract thought.

Reflection and the self

Brian Smith, in “Varieties of Self-Reference,”16 makes a useful distinction between introspection (thinking about yourself by virtue of having access to your own datastructures) and reflection (thinking about the relationship between yourself and the world). Introspection has traditionally been thought necessary for reflection.

The construction of the mind’s eye gives you partial introspective access in a systematic way. You can observe what you would do in hypothetical situations, but without access to the machinery that engenders the action, you won’t know quite how or why. By varying the imaginary situation, you can induce a model of your own reasoning. This model, however, can only be as good as your ability to simulate the world’s responses to your actions, and so must constantly be checked against experience.

Routine activity does not require reflection; the necessary computation just runs. Moreover, quasi-static hardware makes introspection very expensive. We suspect, therefore, that reflection is not only not used in concrete activity, but also is little used in problem solving. It is primarily useful in long-term development: a relatively crude self-model may be good enough to base long-term planning on. This is compatible with the psychoanalytic understanding of self-models, which are used in determining basic values and orientations, yet are incomplete, inexact, and in pathological cases completely unlike the self that is modeled.

We believe that reflection, rather than being based on introspection, primarily “goes out through the world.” Just as concrete activity generally uses the real world as the model of the world, so reflection uses the world’s mirroring of the self as a model of the self. Smith gives the example of realizing that he is being foolishly repetitive at a dinner party and shutting up. Such a realization might possibly be made by examining one’s own actions, but might much more likely be cued by signs of boredom on the part of the audience. This again is consonant with psychoanalytic emphasis on the role of the mother in mirroring the infant’s facial expressions and gestures and, in later life, the role of the reactions of others in maintaining self-esteem.

Smith is concerned in “Varieties” with translation between the indexical representations needed for concrete activity and the abstract representations needed for reflection. He proposes the use of the self-model as a pivot in this translation. Deindexicalization consists in part in filling in implicit extra arguments to relations with the self. Thus, to take Smith’s example, the sensory item hungry can be used concretely to activate eating goals. But to reason about other people’s hunger, it must be deindexicalized to (hungry me), generalized to (hungry x), and perhaps instantiated as (hungry bear). Similarly, only by deindexicalizing the cereal box representation can cereal boxes in general, or cereal boxes remote in time or space, be considered.
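Smith’s hungry example can be traced step by step. The Python below is only a sketch: relations are tuples, the self is the hypothetical constant `"me"`, and variables are written as strings such as `?x`; none of these conventions comes from Smith’s paper.

```python
SELF = "me"


def concrete_response(active_items):
    """Concrete use: the bare indexical item directly activates a goal."""
    return "eat" if "hungry" in active_items else None


def deindexicalize(item):
    """Fill in the implicit argument with the self: hungry -> (hungry me)."""
    return (item, SELF)


def generalize(relation, variable="?x"):
    """Replace the self with a variable: (hungry me) -> (hungry ?x)."""
    predicate, *args = relation
    return (predicate, *(variable if a == SELF else a for a in args))


def instantiate(pattern, bindings):
    """Bind variables: (hungry ?x) with {?x: bear} -> (hungry bear)."""
    predicate, *args = pattern
    return (predicate, *(bindings.get(a, a) for a in args))


mine = deindexicalize("hungry")
anyone = generalize(mine)
bear = instantiate(anyone, {"?x": "bear"})
```

The self-model acts as the pivot: only after `hungry` has been tied to `me` is there an argument position that can be generalized and then filled with someone else.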

Cognitive ideology; planning

The nature of abstract thought, unlike that of concrete activity, is determined by your culture. (The content of concrete activity is of course also culturally determined, but its form is determined by the innate hardware.) We use the term cognitive ideology to refer to culturally transmitted ways of organizing activity that involve ideas such as plans, knowledge, complexity, understanding, order, search, and forgetting.

We would like to provide an account of planning, an item of cognitive ideology, as a paradigm form of the sort of abstract reasoning we have sketched above. “AI and Everyday Life”17 argues that very little planning is done in daily life, and that such plans as are constructed are only skeletal, never more than a dozen steps. The latter is a good thing, because Planning for Conjunctive Goals18 shows that planning, even in the trivial sorts of domains considered in the AI literature, is computationally intractable.

The remainder of this section presents first-person anecdotes from the daily life of one of us (Chapman). These anecdotes illustrate sorts of activity that are not well accounted for by current AI planning theories and which must be explained either in giving a psychologically realistic account of human activity or in building a robot that acts in real-world domains. Our partial analyses hint at the form an account of planning in the framework of this paper might take.

Classical planning emphasized the means-ends relationship between plan steps and the discovery of order constraints among them based on interactions between pre- and postconditions. Observation of everyday planning leads us to believe that these sorts of reasoning are unusual. In general, the right way to achieve a goal is obvious. Pre- and postconditions are unknown, variable, too hard to represent accurately, or out of the agent’s control. Ordering decisions are generally made for quite other reasons.

When Planning for Conjunctive Goals was printed, I sent about sixty copies out to people who had asked for them. I set up an assembly line for putting copies in envelopes and addressing them. The Tyvek envelopes I use have gummed flaps with waxpaper over them; to make them sticky, you pull off the waxpaper, rather than licking the glue. So I pulled the waxpaper off an envelope, shoved a copy of the report in, and sealed the flap. The second time I tried, the glue stuck to the back of the report as it slid half-way in. I had to tear the report away from the flap, damaging both somewhat, and seal the envelope with tape. From then on, I put the reports in before pulling off the waxpaper. In this example, the two steps must be ordered, but it is hard to give an account of the reason in terms of preconditions. You could say that it’s a precondition for putting reports in envelopes that the flap not be sticky, but that isn’t really true; you can still do it if you’re lucky or careful. Such a precondition seems artificial, because it doesn’t tell you why you have to put the report in first. In any case, if there were such a precondition, I didn’t know about it when I started, though I think I had a pretty complete understanding of envelopes and tech reports. The problem was not in the static configuration of the pieces, but a dynamic emergent of their interaction.

Bicycle repair manuals tell you that when you disassemble some complex subassembly like a freewheel, you should put all the little pieces down on the ground in a row in the order you disassembled them. That’s because (if you know as much about freewheels as I do) the only available representation of the pieces is “weird little widgets.” They are indistinguishable from each other under this representation. Because quasi-static hardware can’t represent logical individuals, you can’t remember (much less reason about) which is which and what’s connected to what.

This story illustrates several themes of the paper. The manual’s advice is a piece of culturally transmitted metaknowledge that allows you to work around the limitations of your hardware in order to perform activity in a partly planned way. The plan—the order in which the freewheel should be reassembled—is not sufficient to completely determine the activity; you still have to be responsive to the situation to see just how each piece should be put back on. In fact, the plan is not even represented in your head, but externally, as a physical row of objects on the floor. Internalization of such an external representation may be the basis of our ability to remember lists of things to do.
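The manual’s trick amounts to using the floor as a stack. In the Python sketch below, neither procedure ever inspects what a piece is; each relies only on where it lies in the row, which is all the “weird little widgets” representation allows. The piece labels are hypothetical and exist only so the result can be checked.

```python
def disassemble(assembly):
    """Remove pieces outermost first, laying each at the end of a row on
    the floor. The row, not the head, now holds the reassembly plan."""
    row = []
    while assembly:
        row.append(assembly.pop())  # the outermost piece comes off first
    return row


def reassemble(row):
    """Walk the row backwards: the last piece removed goes back on first.
    Neither function looks at what a piece is, only at where it lies."""
    assembly = []
    while row:
        assembly.append(row.pop())
    return assembly


# Hypothetical labels, innermost to outermost; the procedures never read them.
parts = ["piece-1", "piece-2", "piece-3", "piece-4", "piece-5", "piece-6"]
row = disassemble(list(parts))
laid_out = list(row)
rebuilt = reassemble(row)
```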

I have a very large spice shelf, which until recently was total chaos. One day, while I was fruitlessly searching for a jar of tarragon, it occurred to me to alphabetize the shelf. In the course of doing so, I discovered a number of amazing things, among them that I had fourteen bottles of galangal.19 Galangal is not an easy spice to come by. I realized that every time I went to an oriental food store, as I do every few months, I would remember that difficulty and pick up a bottle. Using the culturally-given idea of alphabetizing, I was able to overcome my inability to introspect about my spice-buying routines. Since this routine (buying a jar of galangal every time) was certainly nowhere explicitly represented, introspection would not have helped even had my hardware supported it. Only if I thought to simulate several cycles of oriental-food-store shopping and could do so accurately (both unlikely) could I have discovered the bug.
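Alphabetizing helps here because sorting puts identical jars side by side, so duplicates become visible as adjacent runs with no memory of past purchases. A minimal Python sketch, with a hypothetical shelf built from the spices mentioned above:

```python
from itertools import groupby


def alphabetize(shelf):
    """Rearrange the jars by label, as one would the physical shelf."""
    return sorted(shelf)


def duplicates(sorted_shelf):
    """On a sorted shelf, identical jars are adjacent; count each run."""
    counts = {}
    for label, group in groupby(sorted_shelf):
        n = sum(1 for _ in group)
        if n > 1:
            counts[label] = n
    return counts


# Hypothetical shelf contents, in the chaotic order they were shelved.
shelf = ["tarragon", "cumin"] + ["galangal"] * 14 + ["basil"]
tidy = alphabetize(shelf)
```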

Finding a spice jar on an alphabetized shelf is a search in the world, rather than in the head. Internalization of such searches produces the internal searches of which AI currently posits so many.
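On an alphabetized shelf, that search in the world can be a binary search: glance at the middle of the remaining stretch, then look left or right. The Python sketch below counts glances; the shelf contents are hypothetical. (The standard `bisect` module does the same search without the counter.)

```python
def find_jar(shelf, label):
    """Binary search on an alphabetized shelf. Each loop iteration stands
    for one glance at a physical jar. Returns (position or None, glances)."""
    lo, hi = 0, len(shelf)
    glances = 0
    while lo < hi:
        mid = (lo + hi) // 2
        glances += 1
        if shelf[mid] == label:
            return mid, glances
        if shelf[mid] < label:
            lo = mid + 1
        else:
            hi = mid
    return None, glances


# Hypothetical alphabetized shelf.
shelf = ["allspice", "basil", "cumin", "dill", "galangal", "mace", "sage", "tarragon"]
```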

Sometimes planned action is fluid and routine. I have observed in more detail than belongs here the way I cope with the routine situation of coming through the front door of my house with a load of groceries on my bicycle, while wearing heavy winter clothing. Immediately after opening the door a dozen actions occur to me. This may seem like a lot; because we know how to cope with it, we underestimate the complexity of daily life. These actions for the most part do not stand in a means-ends relationship to each other; they satisfy the many different goals which are activated as I come through the door.

[The following discussion interweaves phenomenological observations of an everyday-life routine with unimplemented technical proposals about how to model it. The technical bits assume understanding of the state of the art of AI action theory as of 1986. So, you may want to skip over all that to the next section. Or, you might find the phenomenology interesting; it’s probably understandable if you just ignore the technical bits.]

I cannot do all these things at once. However, this situation is routine in the sense that it happens often and I know how to cope with it without breakdown; it is not routine in the sense that it is sufficiently complex and sufficiently variable that I have to plan to deal with it. I don’t have a stored macrop (canned action sequence) for it.

I can plan routinely to deal with this situation because about twenty arguments about how to proceed immediately occur to me. These are just at the fringe of consciousness. I can make them conscious effortlessly, but typically they flash by at many per second, so they aren’t fully verbalized in the internal dialog. The arguments concern the order in which the actions should be done. If we were to encode these arguments in a dialectical interpreter, nonlinear planning would fall out automatically. A set of arguments about action orderings effectively constitutes a partial order on plan steps. The argument that A should be before B can simply be phrased as an objection to B, non-monotonically dependent on A’s not having been done; then nonlinear planning and execution fall out directly.
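The following Python sketch is one hypothetical reading of that proposal. The paper’s technical proposals were never implemented, so this is not the authors’ code. Each ordering argument `Before(first, second)` is treated as an objection to `second` that lapses once `first` has been done, and the executor repeatedly performs any action that no live argument objects to. The action names and the tie-breaking rule (take the first ready action) are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Before:
    """An argument that action `first` should precede action `second`.

    Read as an objection to `second` that holds only while `first`
    has not yet been done (a non-monotonic dependency).
    """
    first: str
    second: str

def objected(action, arguments, done):
    """True if some live argument currently objects to `action`."""
    return any(arg.second == action and arg.first not in done for arg in arguments)

def run(actions, arguments):
    """Interleave deciding and acting: repeatedly do any unobjected action.

    No total order is computed in advance; the sequence falls out of which
    objections are live at each moment.
    """
    done = []
    pending = list(actions)
    while pending:
        done_set = set(done)
        ready = [a for a in pending if not objected(a, arguments, done_set)]
        if not ready:
            raise RuntimeError(f"every remaining action is objected to: {pending}")
        chosen = ready[0]  # any ready action would do; take the first listed
        done.append(chosen)
        pending.remove(chosen)
    return done

# Hypothetical front-door actions and arguments, loosely after the text.
actions = ["put coat away", "take off coat", "close door", "push bike in"]
arguments = [
    Before("take off coat", "put coat away"),
    Before("push bike in", "close door"),
]
print(run(actions, arguments))
# ['take off coat', 'put coat away', 'push bike in', 'close door']
```

The two arguments only constrain pairs of steps; everything else about the sequence is left to the moment of execution. A cyclic set of arguments leaves every remaining action objected to, which the sketch reports as an error.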

Of the arguments, roughly half are what might be called necessity orderings. They are arguments about the order in which things must be done for them to work at all. They don’t say “action A has prerequisite p, which is clobbered by action B, so do A first”; like the envelope example, they are at a higher level. You have to take off your coat before putting it away because… well, because that’s just the way it is. I must have known the reason once; it just seems self-evident now, and it certainly doesn’t have anything to do with preconditions.

Besides necessity orderings, there are optimization arguments. Some of these are orderings; some are in the vocabulary of quasiquantitative time intervals. For example, I should lock the door before leaving the front hall, or I will forget to do it once I’m out of that context. Similarly, I should sign up for grocery money spent as soon as possible, because I often forget to do so, but remember now. Here I am working to overcome my own future immersion in a concrete situation. The door should be closed (as opposed to locked) within about ten seconds, because it’s freezing cold out. Again, I need to take off my coat within about a minute, or I will get hot and sweaty.
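The quasiquantitative arguments can be sketched as soft deadlines checked against a candidate sequence. In this hypothetical Python sketch the durations are invented for illustration; only the rough limits (about ten seconds for closing the door, about a minute for the coat) come from the text, and `deadline_report` is a made-up helper.

```python
from typing import Dict, List, Tuple

def deadline_report(sequence: List[Tuple[str, float]],
                    deadlines: Dict[str, float]) -> Dict[str, bool]:
    """For each action with a soft deadline, report whether it finishes in time.

    `sequence` lists (action, rough duration in seconds) in execution order;
    `deadlines` maps an action to "done within this many seconds of entering".
    An action missing from the sequence never finishes, so it misses its deadline.
    """
    finished_at = {}
    clock = 0.0
    for action, duration in sequence:
        clock += duration
        finished_at[action] = clock
    return {action: finished_at.get(action, float("inf")) <= limit
            for action, limit in deadlines.items()}

# Hypothetical durations; the deadlines follow the text.
sequence = [("push bike in", 5), ("close door", 2), ("take off coat", 20)]
deadlines = {"close door": 10, "take off coat": 60}
print(deadline_report(sequence, deadlines))  # {'close door': True, 'take off coat': True}
```

Unlike the necessity orderings, these arguments do not forbid any sequence outright; they only grade sequences as better or worse.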

In fact, it so happens that the set of orderings is cyclic: there is an argument that I should put my keys (which I am holding) in my pocket first thing, to make it possible to grasp my bicycle and push it through the door; but there is also an argument that I shouldn’t put the keys away until I’ve locked the door, which can’t happen until I’ve closed it, which can’t happen until I’ve pushed the cycle through. This registers phenomenologically as a minor hassle; I feel slightly annoyed. Like cycles in planning generally, it must be resolved by adding another step (a white knight): in this case, it was to put the keys in my pocket now and take them out again later.
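The cycle and its repair can be sketched with Python’s standard `graphlib` module (Python 3.9+), used here as a stand-in for whatever ordering machinery a planner would have. Each step maps to the set of steps that must precede it. `TopologicalSorter` raises `CycleError` on the cyclic set; adding the white-knight step “take keys out” (and treating the final “put keys away” as a separate step from the first pocketing) makes a consistent order possible. The step names and the helper `try_order` are hypothetical paraphrases of the text.

```python
from graphlib import CycleError, TopologicalSorter

def try_order(constraints):
    """Return (order, None) for a consistent set of orderings, or (None, cycle).

    `constraints` maps each step to the set of steps that must come before it.
    """
    try:
        return list(TopologicalSorter(constraints).static_order()), None
    except CycleError as err:
        return None, err.args[1]  # graphlib reports the cycle as a list of nodes

# The cyclic arguments: pocket the keys before grasping the bike, but only
# after locking the door, which needs the door closed and the bike inside.
cyclic = {
    "push bike in": {"pocket keys"},
    "close door": {"push bike in"},
    "lock door": {"close door"},
    "pocket keys": {"lock door"},
}

# White knight: pocket the keys now, take them out again later to lock up.
resolved = {
    "push bike in": {"pocket keys"},
    "close door": {"push bike in"},
    "take keys out": {"close door"},
    "lock door": {"take keys out"},
    "put keys away": {"lock door"},
}

print(try_order(cyclic)[1])  # the four steps of the cycle, in some rotation
print(try_order(resolved)[0])
# ['pocket keys', 'push bike in', 'close door', 'take keys out', 'lock door', 'put keys away']
```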

These arguments don’t take into account the number of available manipulators. For example, I could take off my coat before putting the bicycle up against the wall where it belongs. There is in fact an argument for that: I want to get out of my coat as soon as possible, while it doesn’t matter just when the cycle gets put away. However, I need about one and a half hands to hold up the bike (with its destabilizing load of groceries), so it would be difficult to take off my coat first. Introspectively, I don’t reason about hand allocation as I build the plan; and when I went to set it down on paper, it became obvious why. It’s absurdly difficult. It’s a pain even for a linear plan; you have to keep track of what’s in each hand at every instant. In a nonlinear plan, it seems to be just about impossible.

So I don’t plan manipulator allocation ahead of time; it’s very easy to deal with during execution, because I can just sense what is in my hands at any given instant. This is another example of the immediacy of the world making reasoning easy. Part of the reason this planning is routine and breakdown-free is that it happens that I never run out of hands. This is a contingent, emergent fact about the structure of the world and my ability to plan and our interactions. I couldn’t make the optimal decision in all cases, but I can do well enough in this one. To prove the former point, on the couple of occasions on which the phone has rung as I came in through the door, I experienced a breakdown. I left the door open and it got real cold in the front hall and my bicycle ended up on the floor with groceries spilling out of it and I left my coat on and I ended up feeling upset.
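A hypothetical sketch of leaving hand allocation to execution: the agenda’s preferred order ignores hands entirely, and before each step the executor “looks” at how many hands are occupied (here just a number, `held`) and does the first pending action that fits. The two-hand capacity, the 1.5 hands for the loaded bicycle, and the action names are assumptions taken loosely from the text.

```python
from dataclasses import dataclass

HANDS = 2.0  # total manipulators available

@dataclass
class Action:
    name: str
    free_hands_needed: float      # hands that must be free to start the action
    hands_released: float = 0.0   # hands freed by doing it (negative = taken up)

def execute(agenda, held):
    """Follow `agenda` in its preferred order, but let sensed hand occupancy decide.

    `held` is the number of hands currently occupied; checking it stands in for
    looking at your hands, so hand allocation is never planned ahead.
    """
    log = []
    pending = list(agenda)
    while pending:
        free = HANDS - held
        doable = [a for a in pending if a.free_hands_needed <= free]
        if not doable:
            raise RuntimeError("breakdown: no pending action fits the free hands")
        action = doable[0]
        held -= action.hands_released
        pending.remove(action)
        log.append(action.name)
    return log

# The planner prefers getting out of the coat first, but the bike occupies 1.5 hands.
agenda = [Action("take off coat", 2.0), Action("lean bike on wall", 0.0, hands_released=1.5)]
print(execute(agenda, held=1.5))  # ['lean bike on wall', 'take off coat']
```

Hand allocation costs nothing here because it is read off the current state at each step; only when nothing fits (as with the ringing phone) does the sketch break down.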

Cliches

Cognitive cliches are Chapman’s theory of the most abstract structures in the adult mind.20 These structures are substantially less general than, for example, predicate calculus formulae with a theorem prover. Cognitive cliches support only

A cognitive cliche is a pattern that is commonly found in the world and, when recognized, can be exploited by applying the intermediate methods attached to it. The flavor of the idea is perhaps best conveyed by some examples: transitivity, cross products, successive approximation, containment, enablement, paths, resources, and propagation are all cognitive cliches. In general, a cognitive cliche is a class of structures which are components of mental models, occur in many unrelated domains, can be recognized in several kinds of input data, and for which several sorts of reasoning can be performed efficiently. Planning for Conjunctive Goals describes the possible application of cliches to classical planning. If a problem can be characterized in terms of certain cliches, otherwise intractable planning problems can be solved by polynomial intermediate methods.
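As an illustration of a cliche paired with a polynomial method, here is transitivity handled by Warshall’s transitive-closure algorithm, which runs in O(n^3) time for n items. This is a standard algorithm chosen for the sketch, not necessarily one of the intermediate methods Chapman attached to the cliche; the example facts are hypothetical.

```python
def transitive_closure(pairs):
    """Warshall's algorithm: every pair (a, c) implied by chaining (a, b), (b, c).

    Runs in O(n^3) for n items; it applies to any relation recognized as
    transitive, whatever domain the relation comes from.
    """
    items = sorted({x for pair in pairs for x in pair})
    reach = {a: {b: False for b in items} for a in items}
    for a, b in pairs:
        reach[a][b] = True
    for k in items:              # allow k as an intermediate step
        for i in items:
            if reach[i][k]:
                for j in items:
                    if reach[k][j]:
                        reach[i][j] = True
    return {(i, j) for i in items for j in items if reach[i][j]}

# Hypothetical "must happen before" facts from the front-door example.
before = {("push bike in", "close door"), ("close door", "lock door")}
print(sorted(transitive_closure(before)))
# [('close door', 'lock door'), ('push bike in', 'close door'), ('push bike in', 'lock door')]
```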

Developmentally, cliches are abstracted from concrete competences in specific domains. Ideally, instances of, say, cross-product in many domains would be analogically related and the commonalities abstracted into a single coherent, explicitly represented intermediate-competence module. In fact, it seems likely that not all instances of cross-products are recognized as such, and that several clusters of them might join together, creating several subtly different intermediate competences. Such a competence might not be as general in application as ideally it could be, yet still be useful.

What sort of routine activity might cognitive cliches be abstracted from? We suspect that the most basic, central cliches, the naive mathematical ones such as cross-product and ordering, are abstracted principally from the visual routines described by Shimon Ullman.21 In thinking about naive-mathematical cliches, we have a strong sense of visual processing being involved. When we think about cross products, we think of a square array or grid; when we think of an ordering, we think of a series of objects laid out along a line. We suspect that these cliches have been abstracted from visual routines for parsing just such images. There is a specific visual competence involved in looking at a plaid fabric, which is the ability to follow a horizontal line, then change to the vertical, and scan up or down, thus reifying the cross point. The internalization of this visual routine and others like it is the ability to see an array in the mind’s eye and similarly to scan either axis. This ability may serve as the nucleus for the development of all the naive-mathematical competence surrounding the idea of cross-products.
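A toy rendering of the plaid-scanning routine described above: the cross product of two hypothetical label lists is laid out as a grid, and a cell is reached by following the top row across, then turning and scanning down the column. This models only the described scan order; it makes no claim about how visual routines are actually computed, and all names are illustrative.

```python
from itertools import product

def as_grid(rows, cols):
    """Lay out the cross product rows x cols as a 2-D array, like a plaid."""
    return [[(r, c) for c in cols] for r in rows]

def cross_point(grid, row_index, col_index):
    """Follow a horizontal line to the column, then scan vertically to the row.

    Returns the cell found and the path of positions visited, to mimic a
    scan rather than direct indexing.
    """
    path = [(0, j) for j in range(col_index + 1)]            # along the top row
    path += [(i, col_index) for i in range(1, row_index + 1)]  # down the column
    return grid[row_index][col_index], path

rows = ["red", "blue"]
cols = ["shirt", "scarf", "hat"]
grid = as_grid(rows, cols)
print(cross_point(grid, 1, 2))
# (('blue', 'hat'), [(0, 0), (0, 1), (0, 2), (1, 2)])
```

Reading the grid row by row gives exactly the pairs produced by `itertools.product`, which is the sense in which the array is a picture of the cross product.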

Visual routines are procedures for parsing images; they are dynamically assembled from a fixed set of visual primitives. By Ullman’s account, visual routines are compiled from explicitly represented procedures, but they might also be non-represented routines in our sense. We also suppose that oculomotor actions, as well as early vision computations, might be primitives in visual routines. Visual routines are used for intermediate-level vision: the primitives are too expensive to be computed locally over an entire image (as early vision computations are). The routines are executed in response to requirements of a late-vision object-recognition competence, which are too various to build into hardware. The internalization of visual routines results in a mind’s eye that is very far from an array of pixels, but rather consists of just enough machinery to simulate outputs from the visual routine primitives.

Conclusion

This paper summarizes portions of a paper, “AI and Everyday Life,” that we are writing about an emerging view of human activity. The new view starts from the concrete and uses an understanding of it to approach the traditional problems of abstract reasoning. This view is shared, at least in part, by many researchers who have come to it independently from very different perspectives. This gives us more confidence in its necessity. In most cases, researchers have reluctantly given up the traditional primacy of the abstract only in the face of some driving problem. Among these problems are programming a mobile robot, writing reflexive interpreters, deriving an ethnomethodological account of human-machine interaction, programming quasi-static computers, encoding complex concrete real-world problems, working around the computational intractability of classical planning, and developing a computational account of what is known in psychology about human development. We believe that finding coherence among such diverse problems will provide a broad base for work in the new view.22

Afterword, 2017

This paper is part of a century-long conversation that re-thinks meaningness and what it is to be human.23 Some ideas in it were original to Agre and/or myself; but as the footnotes make clear, we took most from diverse disciplines outside of artificial intelligence.

There was an extraordinary flowering of this conversation in the San Francisco Bay Area in the mid-1980s, when isolated researchers in seemingly unrelated fields realized they were addressing the same issues in similar ways. A key sentence in the paper is: “This view is shared, at least in part, by many researchers who have come to it independently from very different perspectives.” The synergies produced rapid progress and startling results.

Five years later, the movement fizzled out, and the era seems to have been forgotten.24 Many, but not all, of our ideas have been rediscovered since, without awareness of this prehistory. “How Embodied Is Cognition?” dates the beginnings of the E- movement to the 1990s, but its central ideas were fully formed in 1986.

The fizzling was, as far as I can tell, due to a series of unfortunate historical accidents, rather than intellectual flaws. We each dropped the research program for reasons of personal circumstance, mainly.

“Abstract Reasoning as Emergent from Concrete Activity” may be the best overall summary of Agre’s and my joint work. (For a dramatically different, more recent overview, see “I seem to be a fiction.”) The paper was a presentation at a conference on models of action. I wrote it as an informal explanation of the conceptual framework for our research program, rather than as a report of results. As a conference paper, it was up against page limits, which may make it dense and difficult to follow.

All our other papers are available on the web, and have been cited several thousand times. This one seems to have been cited only twice. Presumably this is because the proceedings volume immediately disappeared into obscurity. Advice to young researchers: do not bury your most important work in a conference proceedings! It barely counts as an academic publication.

The Conclusion section promises a forthcoming paper “AI and Everyday Life: The Concrete-Situated View of Human Activity.” That was supposed to be the full-length version, with much more explanation, plus detailed reports on technical results. We never finished it. The book versions of Phil’s and my PhD theses were the most detailed accounts of our research, but they concentrated on technical results rather than the philosophical framework.

I evangelized the concrete-situated (“embodied, enactive, extended, embedded, ecological, engaged, emotional, expressive, emergent”) view at numerous conferences in the late 1980s. Some people got it; most didn’t. There was significant momentum for a while, but once we stopped pushing, cognitive “science” mostly reverted to its comfortable rationalist/representationalist ideology.

It seemed difficult to get the point across. I hope to do better now. Maybe there’s better explanatory technology available!

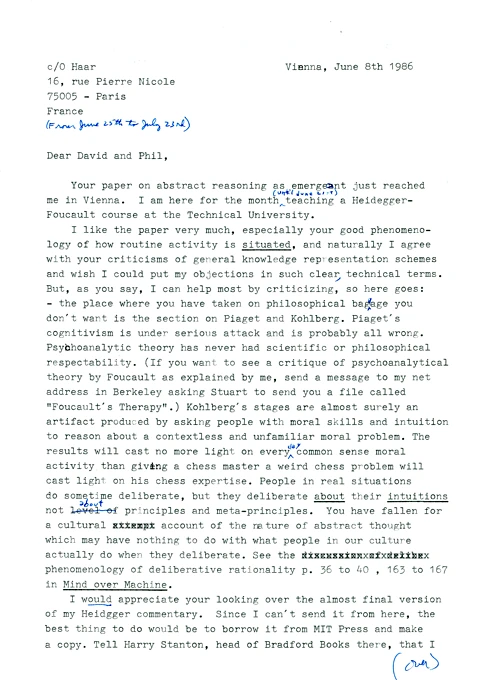

Special bonus: Bert Dreyfus’ comments on our paper

While packing for a move in 2022, I found a letter from Hubert Dreyfus to Phil and me, kindly telling us that practically everything in the paper was wrong. Dreyfus was both the major English-language interpreter of Heidegger’s philosophy and the foremost critic of cognitivism, rationalism, representationalism, and AI research from the 1960s until his death in 2017. Our work was massively influenced by his.

It took me (at least) a few years to understand that his criticism here was entirely correct.

- 1.Daniel D. Hutto and Patrick McGivern, “How Embodied Is Cognition?”, The Philosophers’ Magazine, Issue 68, 1st Quarter 2015, pp. 77–83. I have edited the extract for clarity and concision, without (I hope) significantly altering its meaning. My thanks to Jayarava Attwood for pointing me to this paper.

- 2.It was a 1986 conference presentation, published in a 1987 edited version of the conference proceedings. The full citation: David Chapman and Philip E. Agre, “Abstract Reasoning as Emergent from Concrete Activity,” Reasoning About Actions and Plans, Michael P. Georgeff and Amy L. Lansky, eds., Morgan Kaufmann, Los Altos, CA, 1987, pp. 411-424.

- 3.Martin Heidegger, Being and Time (1962); Hubert Dreyfus, Being-in-the-world: A Commentary on Heidegger’s Being and Time, Division I (1990). [Dreyfus’s book was listed as “forthcoming” in our paper; we had a photocopy of his pre-publication manuscript, and it was the single biggest influence on our work.]

- 4.Jean Piaget, The Origins of Intelligence in Children (1952) and Structuralism (1970).

- 5.D. W. Winnicott, Through Paediatrics to Psycho-Analysis (1975).

- 6.Philip E. Agre, “The Structures of Everyday Life,” MIT Working Paper 267, February 1985; “Routines,” MIT AI Memo 828, May 1985. Philip E. Agre and David Chapman, “AI and Everyday Life: The Concrete-Situated View of Human Activity,” in preparation. [We never finished that paper. A fuller account than the 1985 ones would be Agre’s 1997 Computation and Human Experience.]

- 7.[“Simon’s ant” needed no footnote in 1987. It is a parable from Herbert Simon’s seminal The Sciences of the Artificial (1969). The path an ant takes, when navigating rough terrain, may be enormously complex. However, the ant has no mental map of that path; it simply deals with local obstacles as it comes to them. The complexity is in the world, not in the ant’s brain.]

- 8.[“The world and the mind interpenetrate” is the essential insight of the “E-word movement.” Amusingly, I attached a one-word note to this sentence in the document source text: “Expand.” This is what rationalists/cognitivists/representationalists have the hardest time understanding. I don’t know if any finite expansion is sufficient to induce the meta-rational cognitive flip.]

- 9.Gary L. Drescher, The Schema Mechanism: A Conception of Constructivist Intelligence, unpublished Master’s thesis, MIT Department of EE and CS, 1985. “Genetic AI: Translating Piaget into LISP,” MIT AI Memo 890, 1986. [His 2003 Made-Up Minds: A Constructivist Approach to Artificial Intelligence covers continued work in the same framework.]

- 10.John E. Laird, Paul S. Rosenbloom, and Allen Newell, “Chunking in Soar: the Anatomy of a General Learning Mechanism,” Machine Learning, March 1986, Volume 1, Issue 1, pp. 11–46.

- 11.Philip E. Agre, “Routines.” MIT AI Memo 828, May 1985.

- 12.Jeff Shrager, “(Cognitive) Mediation Theory.” Unpublished manuscript.

- 13.Gerald Jay Sussman, A Computational Model of Skill Acquisition (1975).

- 14.Daniel Sabey Weld, “The Use of Aggregation in Causal Simulation,” Artificial Intelligence, 30:1-34, October 1986.

- 15.Paula Menyuk, Sentences Children Use (1969).

- 16.Proceedings of the Conference on Theoretical Aspects of Reasoning About Knowledge, Monterey, California, March 1986.

- 17.[The paper cites “AI and Everyday Life” as “forthcoming,” but it wasn’t. More about that later.]

- 18.David Chapman, “Planning for Conjunctive Goals,” Artificial Intelligence 32 (1987) pp. 333-377. Revised version of MIT AI Technical Report 802, November, 1985.

- 19.[In retrospect, it seems possible that this claim was exaggerated.]

- 20.David Chapman, Cognitive Cliches. MIT AI Working Paper 286, February, 1986.

- 21.Shimon Ullman, “Visual Routines,” MIT AI Memo 723, June, 1983.

- 22.The paper ended with an Acknowledgments section: “Gary Drescher, Roger Hurwitz, David Kirsh, Jim Mahoney, Chuck Rich, and Ramin Zabih read drafts of this paper and provided useful comments. We would like to thank Mike Brady, Stan Rosenschein, and Chuck Rich for the several sorts of support they have provided this research in spite of its manifest flakiness. Agre has been supported by a fellowship from the Hertz foundation. This report describes research done at the Artificial Intelligence Laboratory of the Massachusetts Institute of Technology. Support for the laboratory’s artificial intelligence research has been provided in part by the Advanced Research Projects Agency of the Department of Defense under Office of Naval Research contract N00014-80-C-0505, in part by National Science Foundation grant MCS-8117633, and in part by the IBM Corporation. The views and conclusions contained in this document are those of the authors, and should not be interpreted as representing the policies, neither expressed nor implied, of the Department of Defense, of the National Science Foundation, nor of the IBM Corporation.”

- 23.The conversation begins, arguably, with Heidegger’s Being and Time, published in 1927, but begun several years earlier.

- 24.I hope to write more about it soon, in particular about the central role of Lucy Suchman, who was uniquely able to cross disciplinary boundaries and explain different fields to each other.